SkatterBencher #61: Intel Xeon w5-3435X Overclocked to 5200 MHz

We overclock the Intel Xeon w5-3435X up to 5200 MHz with the ASUS Pro WS W790E-Sage SE motherboard and EK-Pro water cooling.

We’ll do this by individually adjusting each P-core’s maximum allowed Turbo Ratio and core voltage. I’ll also cover the basics of Sapphire Rapids overclocking more broadly. We have lots to cover, so let’s jump straight in.

Intel Sapphire Rapids: Introduction

The Intel Xeon w5-3435X processor is part of Intel’s 4th generation Xeon Scalable processor line-up, formerly known as Sapphire Rapids-112L and Sapphire Rapids-64L.

Sapphire Rapids is the successor to, well, a variety of architectures. On the 4S/8S server side, it’s the successor to the 2020 14nm Cooper Lake. On the 1S/2S server and workstation side, it’s the successor to the 2021 10nm Ice Lake. And on the high-end desktop (HEDT) side, it’s the successor to the 2019 14nm Cascade Lake.

Enthusiasts like myself can think of the Sapphire Rapids W790 platform as the successor of the overclockable Cascade Lake-X and locked Cascade Lake-W processors. Perhaps the real spiritual predecessor of the unlocked Xeon W-2400 and W-3400 series is the overclockable 28-core Xeon W-3175X, launched in 2018.

Intel spoke at length about Sapphire Rapids during the 2021 Architecture Day. I won’t go over the architecture details, but it suffices to say there are some significant improvements over the Ice Lake, Cooper Lake, and Cascade Lake architectures.

The most significant improvements are the Intel 7 process technology and up to 56 Golden Cove P-cores. That makes Alder Lake the equivalent on mainstream desktop. It also features PCIe 5.0, DDR5 ECC RDIMM support, and Intel’s 3rd generation Deep Learning Boost technology. Lastly, Sapphire Rapids transitions from a single monolithic die design to a multi-tile design for increased scalability. Well, sort of. Only the Xeon W-3400 series uses the multi-tile die design, whereas the Xeon W-2400 segment still features a monolithic die.

And that’s not where the difference between the W-2400 and W-3400 segment ends.

While the W-3400 series goes up to 56 P-cores, the W-2400 series only goes up to 24 P-cores. The W-3400 series supports 8-channel memory, whereas the W-2400 series only supports 4-channel memory. The W-3400 series also supports 112 PCIe 5.0 lanes, whereas the W-2400 series only supports 64 lanes.

Intel further segments the Sapphire Rapids CPUs according to the Xeon w3, w5, w7, and w9 brands. That’s similar to how we have Core i3 to Core i9 on the mainstream desktop. Xeon w9 is reserved exclusively for the W-3400 series, and you can only find Xeon w3 processors in the Xeon W-2400 product line. Xeon w5 and w7 are available in both series.

Across all Sapphire Rapids Workstation products, eight overclockable SKUs are split evenly between the W-2400 and W-3400 segments. We’ll get back to how overclocking is enabled later in this video. It suffices to say that the Xeon w5-3435X we’re overclocking in this video is the entry-level overclockable SKU in the W-3400 line-up.

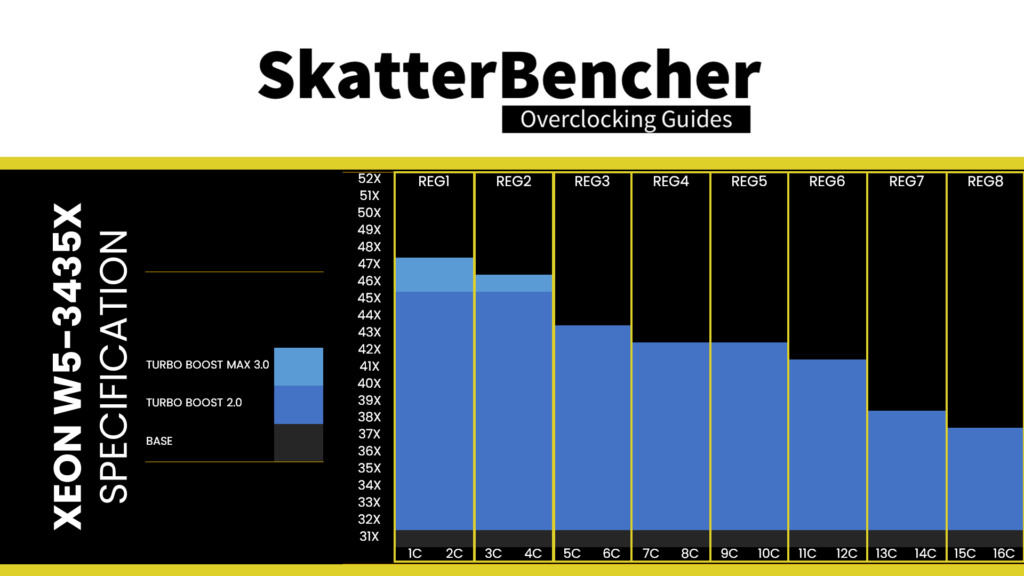

The Xeon w5-3435X has 16 P-cores with 32 threads. The base frequency is 3.1 GHz, the Turbo Boost 2.0 boost frequency is 4.5 GHz, and the Turbo Boost Max 3.0 boost frequency is 4.7 GHz. The maximum boost frequency gradually decreases from 4.7 GHz for up to 2 active cores to 3.7 GHz when all cores are active. The base TDP is 270W, and the Turbo TDP is 324W. The TjMax is 98 degrees Celsius.

In this video, we will cover four overclocking strategies:

- First, we rely on ASUS MCE and ASUS Memory Presets

- Second, we use the ASUS water-cooled OC preset

- Third, we try a static manual overclock

- Lastly, we go for a dynamic manual overclock

However, before we jump into overclocking, let us quickly review the hardware and benchmarks used in this video.

Intel Xeon w5-3435X: Platform Overview

The system we’re overclocking today consists of the following hardware.

| Item | SKU | Price (USD) |
| --- | --- | --- |
| CPU | Intel Xeon w5-3435X | 1,599 |
| Motherboard | ASUS Pro WS W790E-Sage | 1,300 |
| CPU Cooling | EK-Pro CPU WB 4677 Ni + Acetal Prototype | 134 |
| CPU Cooling | EK-Quantum Kinetic FLT 240 D5 | 221 |
| CPU Cooling | EK-Quantum Surface P480M – Black | 150 |
| Fan Controller | ElmorLabs EFC-SB | 20 |
| Fan Controller | ElmorLabs EVC2SE | 35 |
| Memory | V-Color TR51640S840 | |
| Power Supply | Cooler Master V1200 Platinum | 270 |
| Graphics Card | ASUS ROG Strix RTX 2080 TI | 880 |
| Storage | AORUS RGB 512 GB M.2-2280 NVME | 120 |
| Chassis | Open Benchtable V2 | 200 |

ElmorLabs EFC-SB & EVC2

The Easy Fan Controller SkatterBencher Edition (EFC-SB) is a customized EFC resulting from a collaboration between SkatterBencher and ElmorLabs.

I explained how I use the EFC-SB in a separate video on this channel. By connecting the EFC-SB to the EVC2 device, I monitor the ambient temperature (EFC), water temperature (EFC), and fan duty cycle (EFC). I include the measurements in my Prime95 stability test results.

I also use the ElmorLabs EFC-SB to map the radiator fan curve to the water temperature. Without going into too many details: I have attached an external temperature sensor from the water in the loop to the EFC-SB. Then, I use the low/high setting to map the fan curve from 25 to 40 degrees water temperature. I use this configuration for all overclocking strategies.
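To illustrate the low/high mapping described above, here is a minimal Python sketch of a water-temperature-based fan curve. It assumes a simple linear ramp between the two temperature points; the function name and the 30% and 100% duty cycle endpoints are hypothetical values picked for illustration, not the actual EFC-SB settings or firmware behavior.

```python
def fan_duty_percent(water_temp_c, low_temp_c=25.0, high_temp_c=40.0,
                     min_duty=30.0, max_duty=100.0):
    """Map a water temperature to a fan duty cycle using a linear ramp.

    The 25 and 40 degree points come from the text; the duty cycle
    endpoints (30% and 100%) are assumptions for illustration only.
    """
    if water_temp_c <= low_temp_c:
        return min_duty
    if water_temp_c >= high_temp_c:
        return max_duty
    # Fraction of the way between the low and high temperature points
    fraction = (water_temp_c - low_temp_c) / (high_temp_c - low_temp_c)
    return min_duty + fraction * (max_duty - min_duty)


for temp in (24.0, 30.5, 36.0, 41.0):
    print(f"{temp:.1f} C water -> {fan_duty_percent(temp):.1f}% fan duty")
```

Once the water temperature reaches the high point, the fans run flat out and cannot respond any further, which is why this mapping is a handy indicator of a saturated cooling solution.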

The main takeaway from this configuration is that it gives us a good indicator of whether the cooling solution is saturated.

Intel Xeon w5-3435X: Benchmark Software

We use Windows 11 and the following benchmark applications to measure performance and ensure system stability.

| BENCHMARK | LINK |
| --- | --- |
| SuperPI 4M | https://www.techpowerup.com/download/super-pi/ |
| Geekbench 6 | https://www.geekbench.com/ |
| Cinebench R23 | https://www.maxon.net/en/cinebench/ |
| CPU-Z | https://www.cpuid.com/softwares/cpu-z.html |
| V-Ray 5 | https://www.chaosgroup.com/vray/benchmark |
| AI-Benchmark | https://ai-benchmark.com/ |
| Y-Cruncher | http://www.numberworld.org/y-cruncher/ |
| Blender Monster | https://opendata.blender.org/ |
| Blender Classroom | https://opendata.blender.org/ |
| 3DMark CPU Profile | https://www.3dmark.com/ |
| 3DMark Night Raid | https://www.3dmark.com/ |
| Nero Score | https://store.steampowered.com/app/1942030/Nero_Score__PC_benchmark__performance_test/ |
| Handbrake | https://handbrake.fr/ |
| CS:GO FPS Bench | https://steamcommunity.com/sharedfiles/filedetails/?id=500334237 |
| Shadow of the Tomb Raider | https://store.steampowered.com/app/750920/Shadow_of_the_Tomb_Raider_Definitive_Edition/ |
| Final Fantasy XV | http://benchmark.finalfantasyxv.com/na/ |
| Prime 95 | https://www.mersenne.org/download/ |

Y-Cruncher 25B

Y-Cruncher is a program that can compute Pi and other constants to trillions of digits. Since its launch in 2009, it has become a common benchmarking and stress-testing application for overclockers and hardware enthusiasts.

Regular viewers will know I often include the Y-Cruncher benchmark when overclocking high core-count CPUs. I also recently featured it in my Xeon w7-2495X overclocking guide. I just wanted to highlight that I’m using a different test parameter in this guide. Instead of 10B, I’m using 25B. The reason is simple: 25B requires 115GB of memory, which I have available on this Xeon w5-3435X system (in octo-channel configuration) but didn’t on the Xeon w7-2495X system. Hence I had to use the 10B preset, which only requires 46.5 GB of memory.

Xeon w5-3435X: Stock Performance

Before starting overclocking, we must check the system performance at default settings. Note that on this motherboard, Turbo Boost 2.0 is unleashed by default. So, to check the performance at default settings, you must enter the BIOS and

- Go to the Ai Tweaker menu

- Set ASUS MultiCore Enhancement to Disabled – Enforce All limits

Then save and exit the BIOS.

The default Turbo Boost 2.0 parameters for the Xeon w5-3435X are as follows:

- PL1: 270W

- PL2: 324W

- Tau: 67sec

- ICCIN_MAX: 485A

- ICCIN_VR_TDC: 165A

- PMAX: 824W

- VTRIP: 1.6355V

Here is the benchmark performance at stock:

- SuperPI 4M: 37.406 seconds

- Geekbench 6 (single): 2,268 points

- Geekbench 6 (multi): 16,662 points

- Cinebench R23 Single: 1,685 points

- Cinebench R23 Multi: 29,282 points

- CPU-Z V17.01.64 Single: 691.1 points

- CPU-Z V17.01.64 Multi: 12,324.9 points

- V-Ray 5: 21,368 vsamples

- AI Benchmark: 6,800 points

- Y-Cruncher PI MT 25B: 637.736 seconds

- Blender Monster: 198.80 fps

- Blender Classroom: 97.46 fps

- 3DMark Night Raid: 58,810 points

- Nero Score: 2,690 points

- Handbrake: 40.81 fps

- CS:GO FPS Bench: 565.97 fps

- Tomb Raider: 191 fps

- Final Fantasy XV: 202.36 fps

Here are the 3DMark CPU Profile scores at stock:

- CPU Profile 1 Thread: 906

- CPU Profile 2 Threads: 1,778

- CPU Profile 4 Threads: 3,212

- CPU Profile 8 Threads: 5,733

- CPU Profile 16 Threads: 10,809

- CPU Profile Max Threads: 12,367

When running Prime 95 Small FFTs with AVX2 enabled, the average CPU effective clock is 3262 MHz with 0.884 volts. The average CPU temperature is 43.0 degrees Celsius. The ambient and water temperatures are 26.9 and 30.5 degrees Celsius, respectively. The average CPU package power is 269.9 watts.

When running Prime 95 Small FFTs with AVX disabled, the average CPU effective clock is 3553 MHz with 0.918 volts. The average CPU temperature is 45.0 degrees Celsius. The ambient and water temperatures are 27.4 and 30.7 degrees Celsius, respectively. The average CPU package power is 269.6 watts.

Now, let us try our first overclocking strategy.

However, before we get going, make sure to locate the Clear CMOS button.

Pressing the Clear CMOS button will reset all your BIOS settings to default, which is helpful if you want to start your BIOS configuration from scratch. However, it does not delete any of the BIOS profiles previously saved. The Clear CMOS button is located on the rear I/O panel.

OC Strategy #1: MCE + Memory OC

In our first overclocking strategy, we use ASUS MultiCore Enhancement to unleash the Turbo Boost 2.0 power limits and use ASUS memory presets.

Turbo Boost 2.0

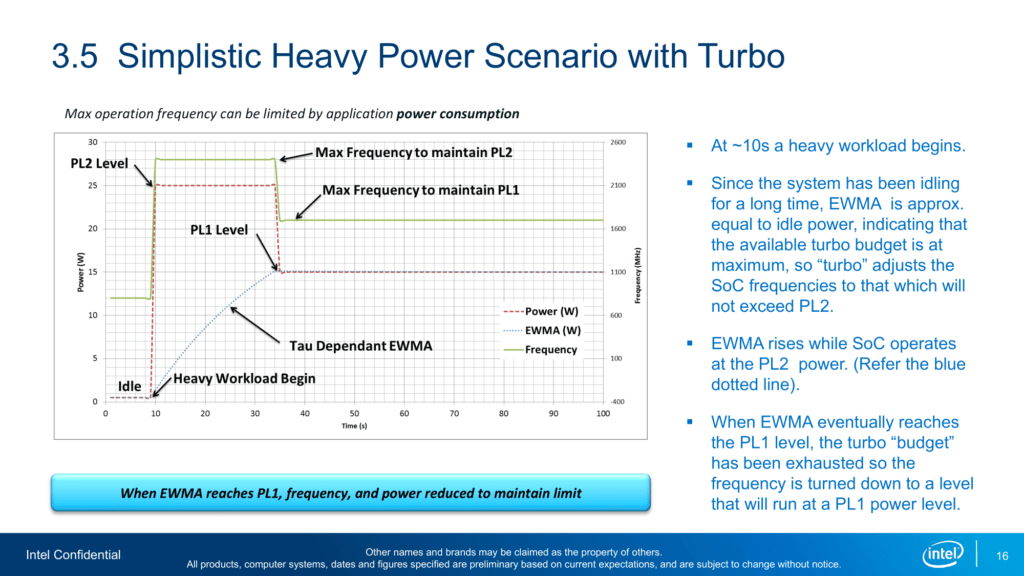

Intel Turbo Boost 2.0 Technology allows the processor cores to run faster than the base operating frequency. Turbo Boost is available when the processor works below its rated power, temperature, and current specification limits. The ultimate advantage is opportunistic performance improvements in both multi-threaded and single-threaded workloads.

The turbo boost algorithm works according to a proprietary EWMA formula. That stands for Exponentially Weighted Moving Average. There are three parameters to consider: PL1, PL2, and Tau.

- Power Limit 1, or PL1, is the threshold the average power won’t exceed. Historically, this has always been set equal to Intel’s advertised TDP. PL1 should not be set higher than the thermal solution cooling limits.

- Power Limit 2, or PL2, is the maximum power the processor can use for a limited time.

- Tau, in seconds, is the time window for calculating the average power consumption. The CPU will reduce the CPU frequency if the average power consumed exceeds PL1.
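To make the interplay between PL1, PL2, and Tau more concrete, here is a minimal Python sketch. Intel’s actual formula is proprietary, so this uses a generic exponentially weighted moving average as a stand-in. The function name, the one-second time step, and the simple "PL2 while the average is below PL1" rule are my own assumptions; the default values are the stock parameters of the Xeon w5-3435X listed earlier.

```python
import math


def simulate_power_budget(requested_w, pl1=270.0, pl2=324.0, tau_s=67.0, dt_s=1.0):
    """Return the power granted at each time step under a generic EWMA limiter.

    This is an illustrative model, not Intel's proprietary algorithm.
    """
    # Weight of the newest sample for a moving average with time constant Tau
    alpha = 1.0 - math.exp(-dt_s / tau_s)
    average_w = 0.0
    granted = []
    for demand in requested_w:
        # Bursts up to PL2 are allowed while the running average is below PL1
        limit = pl2 if average_w < pl1 else pl1
        power = min(demand, limit)
        average_w += alpha * (power - average_w)
        granted.append(power)
    return granted


# A heavy workload asking for 400 W for ten minutes, starting from idle
trace = simulate_power_budget([400.0] * 600)
burst_seconds = sum(1 for p in trace if p == 324.0)
print(f"Time at PL2: {burst_seconds} s, final power: {trace[-1]:.0f} W")
```

In this simplified model, a constant heavy load holds PL2 for roughly two minutes before settling at PL1. Real hardware will behave differently, but the shape is the same: a larger Tau or a higher PL1 extends the time spent at peak power.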

Turbo Boost 2.0 technology is available on Sapphire Rapids as it’s the primary driver of performance over the base frequency.

On ASUS motherboards, the ASUS MultiCore Enhancement option gives you an easy way to unleash the Turbo Boost power limits. Set the option to Enabled – Remove All Limits and enjoy maximum performance.

Strictly speaking, adjusting the power limits is not considered overclocking, as we don’t change the CPU’s thermal, electrical, or frequency parameters. Intel provides the Turbo Boost parameters as guidance to motherboard vendors and system integrators to ensure their designs enable the base performance of the CPU. Better motherboard designs, thermal solutions, and system configurations can facilitate peak performance for longer.

ASUS Memory Presets

ASUS Memory Presets is an ASUS overclocking technology that provides a selection of memory-tuning presets for specific memory ICs. The presets will adjust the memory timings and voltages.

The technology was first introduced in 2012 on Z77 and has been on select ASUS ROG motherboards ever since. The memory profiles available differ from platform to platform. On the Maximus V Extreme, for example, there were no fewer than 14 profiles available for various ICs and memory configurations.

Four memory profiles are available on the ASUS Pro WS W790E-Sage SE motherboard, two each for Hynix and Micron. Since our memory can overclock pretty well, we use the profile for Hynix DDR5-6800 memory. However, we manually set the memory frequency to DDR5-6600 since this Xeon w5-3435X wasn’t able to run 6800.

BIOS Settings & Benchmark Results

Upon entering the BIOS

- Go to the Ai Tweaker menu

- Set ASUS MultiCore Enhancement to Enabled – Remove All limits

- Set DRAM Frequency to DDR5-6600MHz

- Enter the DRAM Timing Control submenu

- Enter the Memory Presets submenu

- Select Load Hynix 6800 1.4V 8x16GB SR

- Select Yes

- Leave the Memory Presets submenu

Then save and exit the BIOS.

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- SuperPI 4M: +1.02%

- Geekbench 6 (single): +2.82%

- Geekbench 6 (multi): +4.14%

- Cinebench R23 Single: +2.37%

- Cinebench R23 Multi: +1.19%

- CPU-Z V17.01.64 Single: +1.69%

- CPU-Z V17.01.64 Multi: +0.04%

- V-Ray 5: +2.62%

- AI Benchmark: +2.66%

- Y-Cruncher PI MT 25B: +11.03%

- Blender Monster: +1.72%

- Blender Classroom: +1.83%

- 3DMark Night Raid: +0.71%

- Nero Score: +5.72%

- Handbrake: +1.37%

- CS:GO FPS Bench: +0.03%

- Tomb Raider: +1.57%

- Final Fantasy XV: +0.57%

Here are the 3DMark CPU Profile scores:

- CPU Profile 1 Thread: +0.00%

- CPU Profile 2 Threads: +0.28%

- CPU Profile 4 Threads: +0.09%

- CPU Profile 8 Threads: +0.58%

- CPU Profile 16 Threads: +1.61%

- CPU Profile Max Threads: +0.11%

After unleashing the Turbo Boost 2.0 power limits and increasing the memory frequency, the performance barely improves, except in highly memory-sensitive workloads, which benefit from overclocking the memory from DDR5-4800 to DDR5-6600. We see the highest performance improvement of +11.03% in Y-Cruncher.
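For a sense of scale, here is a short Python sketch of the theoretical peak memory bandwidth before and after the memory overclock. It assumes eight memory channels, each transferring 8 bytes (64 data bits, excluding ECC) per transfer; the function name is hypothetical. These are theoretical ceilings, so real-world gains will be smaller.

```python
def peak_bandwidth_gbs(transfer_rate_mts, channels=8, bytes_per_transfer=8):
    """Theoretical peak bandwidth in GB/s for a DDR5 configuration.

    Assumes 8 bytes (64 data bits, ECC excluded) per channel per transfer.
    """
    return transfer_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


stock = peak_bandwidth_gbs(4800)
tuned = peak_bandwidth_gbs(6600)
uplift = (tuned / stock - 1.0) * 100.0

print(f"DDR5-4800: {stock:.1f} GB/s")
print(f"DDR5-6600: {tuned:.1f} GB/s")
print(f"Theoretical uplift: {uplift:.1f}%")
```

The theoretical uplift is considerably larger than the gain measured in Y-Cruncher, which suggests that even this memory-sensitive workload is only partially limited by raw bandwidth.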

We don’t see a more significant impact from unleashing the Turbo Boost power limits because we’re primarily frequency limited. The standard frequency for an all-core workload is only 3.7 GHz, so the frequency won’t boost beyond that despite unleashing the power limit.

When running Prime 95 Small FFTs with AVX2 enabled, the average CPU effective clock is 3386 MHz with 0.896 volts. The average CPU temperature is 47.0 degrees Celsius. The ambient and water temperatures are 26.8 and 31.4 degrees Celsius, respectively. The average CPU package power is 342.4 watts.

When running Prime 95 Small FFTs with AVX disabled, the average CPU effective clock is 3537 MHz with 0.915 volts. The average CPU temperature is 46.0 degrees Celsius. The ambient and water temperatures are 26.9 and 31.1 degrees Celsius, respectively. The average CPU package power is 310.6 watts.

OC Strategy #2: Water-Cooled OC Preset

In our second overclocking strategy, we use the Water-Cooled OC Preset available in the BIOS. However, the preset usage isn’t as straightforward as with the Xeon w7-2495X in SkatterBencher #59. So, before I explain what the preset does, I want to present three fundamental Intel overclocking technologies: Turbo Boost 2.0, Turbo Boost Max 3.0, and Intel Adaptive Voltage.

Turbo Boost 2.0 Ratio Configuration

We all know the Turbo Boost 2.0 technology from its impact on the power limits, but a second significant aspect of Turbo Boost 2.0 is configuring the CPU frequency based on the number of active cores.

Turbo Boost 2.0 Ratio Configuration allows us to configure the overclock for different scenarios ranging from 1 active core to all active cores. That enables us to run some cores significantly faster than others when the conditions are right. Intel provides eight (8) registers to configure the Turbo Boost 2.0 Ratio.

On mainstream platforms where the top SKU has no more than 8 P-cores, these registers are configured from 1-active P-core to 8-active P-cores. However, on platforms with core counts beyond eight cores, we can configure each register by target Turbo Boost Ratio and the number of active cores.

For example, here is the Turbo Boost Ratio Configuration of the Xeon w5-3435X, with the standard configuration on the left of each arrow and ASUS’ water-cooled OC preset on the right.

- 47X up to 2 active cores -> 47X up to 2 active cores

- 46X up to 4 active cores -> 47X up to 4 active cores

- 43X up to 6 active cores -> 46X up to 8 active cores

- 42X up to 8 active cores -> 46X up to 12 active cores

- 42X up to 10 active cores -> 46X up to 16 active cores

- 41X up to 12 active cores -> 45X up to 20 active cores

- 38X up to 14 active cores -> 44X up to 24 active cores

- 37X up to 16 active cores -> 40X up to 128 active cores

By Core Usage is not the same as configuring each core individually. When using By Core Usage, we determine an overclock according to the actual usage. For example, if a workload uses four cores, the CPU determines which cores should execute this workload and applies our set frequency to those cores.
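The By Core Usage lookup can be sketched in a few lines of Python. The two tables below are copied from the configuration listed above; the function names and the data layout are my own, purely for illustration, and the sketch assumes the default 100 MHz BCLK.

```python
# (maximum active cores, turbo ratio) pairs, copied from the list above
STOCK_TURBO_TABLE = [(2, 47), (4, 46), (6, 43), (8, 42),
                     (10, 42), (12, 41), (14, 38), (16, 37)]
PRESET_TURBO_TABLE = [(2, 47), (4, 47), (8, 46), (12, 46),
                      (16, 46), (20, 45), (24, 44), (128, 40)]


def turbo_ratio(active_cores, table):
    """Return the turbo ratio that applies for a given number of active cores."""
    if active_cores < 1:
        raise ValueError("at least one core must be active")
    for max_active, ratio in table:
        # The first register whose core count covers the load wins
        if active_cores <= max_active:
            return ratio
    raise ValueError("active core count exceeds the configured registers")


def turbo_frequency_mhz(active_cores, table, bclk_mhz=100.0):
    """Convert the applicable ratio to a frequency, assuming a 100 MHz BCLK."""
    return turbo_ratio(active_cores, table) * bclk_mhz


for cores in (1, 4, 8, 16):
    stock = turbo_frequency_mhz(cores, STOCK_TURBO_TABLE)
    preset = turbo_frequency_mhz(cores, PRESET_TURBO_TABLE)
    print(f"{cores:2d} active cores: {stock:.0f} MHz stock, {preset:.0f} MHz preset")
```

Note that the lookup only depends on how many cores are active, not on which cores they are. Which cores end up running the workload is a separate decision.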

Turbo Boost Max 3.0 Technology

In 2016, Intel introduced the Turbo Boost Max Technology 3.0. While carrying a similar name, Turbo Boost Max 3.0 is not an iteration of Turbo Boost 2.0.

Turbo Boost Max Technology 3.0 aims to exploit the natural variance in CPU core quality observed in multi-core CPUs. With Turbo Boost Max 3.0, Intel identifies the best cores in your CPU and calls those the “favored cores.” The favored cores are essential for two reasons.

- First, Intel allows for additional frequency boosts of the favored cores. On the Sapphire Rapids Xeon w5-3435X, there are four favored P-cores. Two can boost to 4.6 GHz, and two can boost to 4.7 GHz. The rest of the non-favored cores are limited to 4.5 GHz.

- Second, the operating system will automatically assign the most demanding workloads to these favored cores, ensuring potentially higher performance.

The performance benefit of ITBMT 3.0 is most visible in low thread count workloads. Highly threaded workloads do not benefit from ITBMT 3.0.

Sapphire Rapids Adaptive Voltage Mode

Like any previous Intel architecture, there are two main ways of configuring the voltage for the CPU cores: override mode and adaptive mode.

- Override mode specifies a single static voltage across all ratios. It is mainly used for extreme overclocking where stability at high frequencies is the only consideration.

- Adaptive mode is the standard mode of operation. In Adaptive Mode, the CPU relies on the factory-fused voltage-frequency curves to set the appropriate voltage for a given ratio. When configuring an adaptive voltage, it is mapped against the “OC Ratio,” the highest configured ratio. We’ll get back to that in a minute.

Since Sapphire Rapids uses FIVR, we can only adjust the core voltage by configuring the CPU PCU via BIOS or specialized tools like XTU.

We can specify a voltage offset for override and adaptive modes. Of course, this doesn’t make much sense for override mode – if you set 1.15V with a +50mV offset, you could just set 1.20V – but it can be helpful in adaptive mode as you can offset the entire V/F curve by up to 500mV in both directions.

On Sapphire Rapids, you can configure the override or adaptive voltage on a Global or Per-Core level. Let’s focus on adaptive mode voltage configuration and first look at how it works for a single core.

When we set an adaptive voltage for a core, this voltage is mapped against the “OC Ratio.” The “OC Ratio” is the highest ratio configured for the CPU across all settings and cores. When you leave everything at default, the OC ratio is determined by the default maximum turbo ratio. In the case of the Xeon w5-3435X, that ratio would be 47X because of the Turbo Boost Max 3.0. The “OC Ratio” equals the highest configured ratio if you overclock.

Specific rules govern what adaptive voltage can be set.

A) the voltage set for a given ratio n must be higher than or equal to the voltage set for ratio n-1.

Suppose our Xeon w5-3435X runs 47X at 1.20V. In that case, setting the adaptive voltage, mapped to 47X, lower than 1.20V, is pointless. 47X always runs at 1.20V or higher. Usually, BIOSes may allow you to configure lower values. However, the CPU’s internal mechanisms will override your configuration if it doesn’t follow the rules.

B) the adaptive voltage configured for any ratio below the maximum default turbo ratio will be ignored.

Take the same example of the Xeon w5-3435X, specified to run 47X at 1.20V. If you try to configure all cores to 45X and set 1.10V, the CPU will ignore this because it has its own factory-fused target voltage for all ratios up to 47X and will use this voltage. You can only change the voltage of the OC Ratio, which, as mentioned before, on the Xeon w5-3435X, is 47X and up.

C) for ratios between the OC Ratio and the next highest factory-fused V/f point, the voltage is interpolated between the set adaptive voltage and the factory-fused voltage.

Returning to our example of a Xeon w5-3435X specified to run 47X at 1.20V, let’s say we manually configure the OC ratio to be 52X at 1.35V. The target voltage for ratios 51X, 50X, 49X, and 48X will now be interpolated between 1.20V and 1.35V.
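Here is a minimal Python sketch of that interpolation, using the example values of 47X at 1.20V and 52X at 1.35V. It assumes a straight line between the two points. The exact interpolation the CPU uses isn’t documented here, so treat the function name and the resulting voltages as illustrative only.

```python
def adaptive_voltage(ratio, fused_ratio=47, fused_v=1.20, oc_ratio=52, oc_v=1.35):
    """Estimate the target voltage for a ratio between two V/F points.

    Assumes linear interpolation, which is an illustrative simplification.
    """
    if not fused_ratio <= ratio <= oc_ratio:
        raise ValueError("ratio must lie between the fused point and the OC Ratio")
    # Voltage added per ratio step between the two points
    slope = (oc_v - fused_v) / (oc_ratio - fused_ratio)
    return fused_v + slope * (ratio - fused_ratio)


for ratio in range(47, 53):
    print(f"{ratio}X -> {adaptive_voltage(ratio):.3f} V")
```

The key takeaway is that we only set the end point of the curve. Every ratio between the factory-fused point and the OC Ratio gets its voltage derived automatically.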

As I mentioned already, we can do this for each core individually. However, that would be rather painful, especially on a 56-core CPU! Fortunately, there’s also an alternative way to set a global adaptive voltage.

When we set a global adaptive voltage, it maps this voltage to the OC Ratio for each core in our CPU. So, if our OC Ratio is 52X and the global adaptive voltage is 1.35V, then every core in our CPU has a voltage frequency curve that goes up to 52X at 1.35V. That certainly makes things easier.

One last note: we can also configure a Per-Core Ratio Limit. Counter-intuitively, this Ratio doesn’t act as a core-specific OC Ratio but as a means to limit what parts of the V/F curve can be used. Let’s use that same example of the 52X at 1.35V. If we set the Per-Core Ratio Limit to 51X, the CPU core will boost up to 5.1 GHz at a voltage interpolated between 52X at 1.35V and 48X at 1.25V.

Sapphire Rapids Per Core Ratio & Voltage Control

While we only recently saw the addition of per-core ratio control on mainstream desktop with Rocket Lake, on the high-end desktop, the ability to control the maximum ratio and voltage for each core has been around since Broadwell-E in 2016.

The Per Core Ratio and Voltage control options let you control the upper end of the voltage-frequency curve of each core inside your CPU. While the general rules of adaptive voltage mode still apply, this enables two crucial new avenues for CPU overclocking.

- First, it allows users to overclock each core and individually find its maximum stable frequency.

- Second, it allows users to set an aggressive by core usage overclock while constraining the worst cores.

Since each core has an independent FIVR-regulated power rail, it’s possible to finetune each core to its maximum capability.

Water-Cooled OC Preset

The water-cooled OC preset is an excellent addition to the ASUS Pro WS W790 motherboards, giving Xeon customers an easy path to additional performance. We can enable the preset with a single button click. The preset drastically improves the all-core performance by changing the Turbo Boost 2.0 Ratio configuration. Furthermore, the preset also adjusts the per-core ratio limit.

On this Xeon w5-3435X, for example, by enabling the preset, the all-core frequency increases by almost 1 GHz from 3.7 GHz to 4.6 GHz. In addition, it increases the maximum CPU ratio for all cores by +1, except for two “super”-favored cores. As a result, all cores can now boost to 4.6 GHz, and four cores can boost to 4.7 GHz.

While the preset aims to do this without any voltage adjustments, it inadvertently changes the voltage for a bizarre reason.

Remember what I said about the Turbo Boost 2.0 and Turbo Boost Max 3.0 ratio configuration? Two cores are specified to run up to 4.7 GHz, two cores can run up to 4.6 GHz, and the remaining 12 cores can only run up to 4.5 GHz. The expectation is that Intel has, for each core, factory-fused a specific voltage for its maximum ratio. When you increase the ratio without adjusting the adaptive voltage, it will use the voltage for its highest V/F point.

Strangely enough, that’s not the case for the twelve non-favored cores. As you can see from this chart, each of the twelve non-favored cores has a factory-fused V/F point for 4.7 GHz … and at 1.37V, it’s really high!

In practical terms, as we learned from the adaptive voltage explanation, that means it’s not possible to control the adaptive voltage for any of the non-favored cores below 4.7 GHz. Or, put differently, every non-favored core will run at an increased voltage when boosting to 4.6 GHz.

That will have a significant impact when we try to use the adaptive voltage later in this video. At the moment, it suffices to say that the water-cooled OC preset inadvertently increases the maximum voltage for all non-favored cores from about 1.12V to 1.24V when up to all 16 cores are active.

BIOS Settings & Benchmark Results

Upon entering the BIOS

- Go to the Ai Tweaker menu

- Set Ai Overclock Tuner to XMP I

- Set ASUS MultiCore Enhancement to Enabled – Remove All limits

- Set CPU Core Ratio to Water-Cooled OC Preset

- Set DRAM Frequency to DDR5-6600MHz

- Enter the DRAM Timing Control submenu

- Enter the Memory Presets submenu

- Select Load Hynix 6800 1.4V 8x16GB SR

- Select Yes

- Leave the Memory Presets submenu

Then save and exit the BIOS.

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- SuperPI 4M: +6.76%

- Geekbench 6 (single): +7.05%

- Geekbench 6 (multi): +22.13%

- Cinebench R23 Single: +7.48%

- Cinebench R23 Multi: +24.41%

- CPU-Z V17.01.64 Single: +9.29%

- CPU-Z V17.01.64 Multi: +24.00%

- V-Ray 5: +22.45%

- AI Benchmark: +25.60%

- Y-Cruncher PI MT 25B: +31.96%

- Blender Monster: +24.57%

- Blender Classroom: +25.85%

- 3DMark Night Raid: +17.83%

- Nero Score: +15.80%

- Handbrake: +28.64%

- CS:GO FPS Bench: +1.34%

- Tomb Raider: +4.71%

- Final Fantasy XV: +1.27%

Here are the 3DMark CPU Profile scores:

- CPU Profile 1 Thread: +11.59%

- CPU Profile 2 Threads: +12.94%

- CPU Profile 4 Threads: +20.39%

- CPU Profile 8 Threads: +23.88%

- CPU Profile 16 Threads: +32.96%

- CPU Profile Max Threads: +31.38%

By enabling the Water-Cooled OC Preset, we significantly increase the all-core frequency. That greatly improved performance as we also unleashed the Turbo Boost 2.0 power limits. We see a maximum performance improvement of +32.96% in 3DMark CPU Profile 16 Threads.
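As a quick sanity check, we can compare these results against the gain we would expect from the all-core frequency increase alone. The Python sketch below assumes performance scales linearly with frequency, which is a simplification; the function name is hypothetical.

```python
def expected_scaling_percent(old_mhz, new_mhz):
    """Performance gain in percent if performance scaled linearly with frequency."""
    return (new_mhz / old_mhz - 1.0) * 100.0


# All-core turbo frequency: 3.7 GHz at stock, 4.6 GHz with the preset
gain = expected_scaling_percent(3700, 4600)
print(f"Expected gain from frequency alone: +{gain:.2f}%")
```

That lines up closely with the measured gains in all-core workloads like Cinebench R23 Multi (+24.41%) and CPU-Z Multi (+24.00%). Results well above this figure, such as Y-Cruncher, also benefit from the memory overclock.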

When running Prime 95 Small FFTs with AVX2 enabled, the average CPU effective clock is 4537 MHz with 1.226 volts. The average CPU temperature is 97.0 degrees Celsius. The ambient and water temperatures are 27.8 and 36.0 degrees Celsius, respectively. The average CPU package power is 613.0 watts.

When running Prime 95 Small FFTs with AVX disabled, the average CPU effective clock is 4580 MHz with 1.226 volts. The average CPU temperature is 85.0 degrees Celsius. The ambient and water temperatures are 27.3 and 34.1 degrees Celsius, respectively. The average CPU package power is 495.7 watts.

OC Strategy #3: Simple Fixed Overclock

In our third overclocking strategy, we pursue a manual overclock. I am not known for advocating for a simple all-core overclock, especially for mainstream platforms. And that’s because you tend to lose out on lots of performance headroom in single-threaded or light workloads. However, I wanted to give this approach another shot for Sapphire Rapids.

Before I show you my BIOS settings, let’s first look at the Sapphire Rapids Clocking and Voltage Topology. Please note that there’s not much public information on the topology. So most of the information I provide is inferred from my testing and the ASUS team’s help.

Sapphire Rapids Clocking Topology

The clocking of a standard Sapphire Rapids platform slightly differs from what we’re used to with mainstream platforms. While technically, Sapphire Rapids should support a 25 MHz Crystal input to the PCH and then have the PCH generate the rest of the clocks, this is not the standard method of operation and is not officially supported.

The supported clocking topology relies on a 25 MHz crystal or crystal oscillator input to an external CK440Q clock generator which then connects to one or more DB2000Q differential buffer devices. The platform supports multiple clocking topologies: balanced and unbalanced.

- Balanced: All CPU BCLKs and PCIe reference clocks are driven by the same DB or different DBs at the same depth levels

- Unbalanced: CPU BCLKs are driven by a DB and PCIe reference clocks by the extCLK/PCHCLK or another DB, or vice versa

The specific implementation depends on your choice of motherboard. Ideally, we would isolate the CPU BCLK from any PCIe reference clocks. However, it seems that this unbalanced architecture is currently not working very well. So you’ll likely see all motherboards adopting a balanced clocking architecture. That means if you increase the CPU BCLK, you also increase the CPU PCIe clock frequency.

Either way, the external clock generator generates multiple 100 or 25 MHz clock sources. These sources can be used for:

- 100 MHz CPU base clock frequency

- 100 MHz CPU PCIe clock frequency

- 100 MHz PCH PCIe clock frequency

- 100 MHz NIC clock frequency

- 100 MHz clock input for the PCH

The 100 MHz CPU BCLK is then multiplied with specific ratios for each of the different parts in the CPU.

Each P-core can run at its own independent frequency. The maximum CPU ratio is 120X. However, the maximum all-core ratio is limited to 52X on multi-tile die CPUs. I’ll get back to that in a minute.

The Mesh PLL ties together the last-level cache, cache box, and seemingly also the memory controller. It can run at a frequency independent of the P-cores. On monolithic dies of the W-2400 processors, the Mesh ratio is limited to 80X. However, on the multi-tile dies of the W-3400 processors, the Mesh ratio is limited to 27X.

The memory frequency is also driven by the CPU BCLK and multiplied by a memory ratio. Unlike on mainstream desktop, the memory frequency is not tied to the memory controller frequency and can run independently. The memory ratio goes up to 88X or a frequency of up to DDR5-8800.
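The ratio arithmetic above can be summarized in a short Python sketch. The ratio limits are the ones quoted in the text; the dictionary keys and the function name are my own labels, and the sketch assumes the default 100 MHz BCLK.

```python
BCLK_MHZ = 100.0  # default CPU base clock frequency

# Maximum ratios quoted in the text (key names are illustrative labels)
MAX_RATIO = {
    "p_core_per_core": 120,
    "p_core_all_core_multi_tile": 52,
    "mesh_w2400": 80,
    "mesh_w3400": 27,
    "memory": 88,
}


def domain_clock(ratio, bclk_mhz=BCLK_MHZ):
    """Return ratio x BCLK: MHz for cores and mesh, MT/s for the memory ratio."""
    return ratio * bclk_mhz


for name, ratio in MAX_RATIO.items():
    print(f"{name}: {ratio}X -> {domain_clock(ratio):.0f}")
```

Because every domain derives from the same BCLK, raising the BCLK shifts all of these clocks at once, which is worth keeping in mind before reaching for it.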

There are a couple of noteworthy oddities with CPU core clocking on Sapphire Rapids.

- While the per-core maximum ratio is 120X, the Turbo Boost 2.0 ratio limit for 1-active core is 117X.

- On multi-tile die Sapphire Rapids variants, the Turbo Boost 2.0 all-core maximum allowed CPU ratio is 52X. Effectively, you must increase the BCLK frequency with the 52X maximum ratio to break all-core world records. This all-core ratio limit is not present on the monolithic die variants.

- The CPU has similar FLL OC issues as on early Alder Lake platforms. In short, a bug appears to allow a ratio to be programmed to the CPU PLL even though the actual effective frequency is lower. That may cause you to see reported frequencies much higher than reasonable. However, the CPU performance in benchmark applications isn’t affected, so the benchmark performance reflects the real effective frequency.

- Building on the previous point, specific CPU cores appear to have different points at which the FLL bug occurs. So for record-chasing attempts, you may try to find the cores in your CPU with the highest FLL range and only use those for benchmarking.
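To illustrate the 52X all-core limit on multi-tile CPUs, here is a small Python sketch that works out the BCLK needed for a given all-core target. The 5500 MHz target is a hypothetical number chosen for illustration, as are the function names. The second function reflects the balanced clocking topology described earlier, where the CPU PCIe clock follows the CPU BCLK.

```python
def required_bclk_mhz(target_core_mhz, max_all_core_ratio=52):
    """BCLK needed to reach a target all-core frequency at the maximum ratio."""
    return target_core_mhz / max_all_core_ratio


def cpu_pcie_clock_mhz(bclk_mhz, balanced=True, nominal_mhz=100.0):
    """In a balanced topology, the CPU PCIe clock rises together with the BCLK."""
    return bclk_mhz if balanced else nominal_mhz


target_mhz = 5500.0  # hypothetical all-core target beyond 52 x 100 MHz
bclk = required_bclk_mhz(target_mhz)
print(f"Required BCLK: {bclk:.2f} MHz")
print(f"CPU PCIe clock (balanced topology): {cpu_pcie_clock_mhz(bclk):.2f} MHz")
```

In other words, any all-core frequency beyond 5200 MHz on a multi-tile CPU also pushes the PCIe clock out of spec on a board with a balanced clocking topology.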

For the extreme overclockers out there: Sapphire Rapids CPUs typically cold bug between -90 and -120 degrees Celsius, likely due to the FIVR.

Sapphire Rapids Voltage Topology

Sapphire Rapids uses a combination of fully integrated voltage regulators (FIVR) and motherboard voltage regulators (MBVR) for power management. There are eight (8) distinct voltage inputs to a Sapphire Rapids processor, and most of them power a FIVR. The FIVR then manages the voltage provided to specific parts of the CPU. The end user can control some of these voltages.

Unfortunately, it’s unclear which FIVRs control what end-user configurable power domains. I did my best to assemble the information, but please bear in mind that the following overview may not be entirely accurate.

VccIN is the primary power source for the CPU. It provides the input power for the FIVR, which in turn provides power to each P-core individually and the combined Mesh and last-level cache. The default voltage is 1.8V. Through Intel’s overclocking toolkit, we have access to up to 57 power domains:

- VccCOREn provides the voltage to up to 56 individual P-cores.

- VccMESH provides the voltage to the mesh and last-level cache.

VccINFAON provides the input power for those parts of the CPU that should always be on. INF stands for “infrastructure,” and AON stands for “always-on.” The power domains include the FIVRs needed for initializing the CPU during boot-up. The default voltage is 1.0V.

VccFA_EHV provides the input power for the PCIe 5.0, UPI I/O, and all other FIVR power domains. The default voltage is 1.0V. Through Intel’s overclocking toolkit, we have access to two power domains:

- VccCFN provides the power for the on-die Coherent Fabric (CF), which provides the means of communication between the various components inside the die or tile. Each module on the die, whether the core, memory controller, IO, or accelerator, contains an agent providing access to the CF. The default voltage is 0.7V.

- VccMDFI provides the power for the Multi-Die Fabric Interconnect, which extends the Coherent Fabric across multiple dies. The default voltage is 0.5V.

VccFA_EHV_FIVRA provides the input power for the analog IO FIVR domains and the core power for the on-package HBM in Sapphire Rapids SKUs with HBM. The default voltage is 1.8V. Through Intel’s overclocking toolkit, we have access to two power domains:

- VccIO provides the power for all IO modules on the die. The default voltage is 1.0V.

- VccMDFIA provides the power for the analog parts of the Multi-Die Fabric Interconnect. The default voltage is 0.9V.

VccD_HV provides the power source for the DDR5 memory controllers. These voltages are not shared with the DDR5 memory. The default voltage is 1.1V. Through Intel’s overclocking toolkit, we have access to two power domains:

- VccDDRD, possibly the memory controller core voltage, which defaults to 0.7V.

- VccDDRA, possibly the memory controller side I/O voltage, which defaults to 0.9V.

VNN provides the power for the CPU GPIO and on package devices. The default voltage is 1.0V.

3V3_AUX provides power for some on-package devices such as the PIROM. The default voltage is 3.3V.

VPP_HBM provides the charge pump voltage for the on-package HBM on Sapphire Rapids CPUs with HBM. The default voltage is 2.5V.
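The overview above can be captured as a small lookup table. The Python sketch below only restates the rails, domains, and default voltages listed in this section; the `InputRail` type and `describe` helper are hypothetical names, and the rail-to-domain mapping carries the same uncertainty as the text.

```python
from typing import Dict, NamedTuple, Optional


class InputRail(NamedTuple):
    default_v: float
    # FIVR output domain -> default voltage (None where the text gives none)
    domains: Dict[str, Optional[float]]


# Eight package inputs and the user-configurable domains behind them,
# as described in the text (mapping may not be entirely accurate).
TOPOLOGY: Dict[str, InputRail] = {
    "VccIN": InputRail(1.8, {"VccCOREn": None, "VccMESH": None}),
    "VccINFAON": InputRail(1.0, {}),
    "VccFA_EHV": InputRail(1.0, {"VccCFN": 0.7, "VccMDFI": 0.5}),
    "VccFA_EHV_FIVRA": InputRail(1.8, {"VccIO": 1.0, "VccMDFIA": 0.9}),
    "VccD_HV": InputRail(1.1, {"VccDDRD": 0.7, "VccDDRA": 0.9}),
    "VNN": InputRail(1.0, {}),
    "3V3_AUX": InputRail(3.3, {}),
    "VPP_HBM": InputRail(2.5, {}),
}


def describe(topology: Dict[str, InputRail]) -> str:
    """Render the rail/domain tree as indented text."""
    lines = []
    for rail, info in topology.items():
        lines.append(f"{rail}: {info.default_v:.1f} V input")
        for domain, volts in info.domains.items():
            default = "no default given" if volts is None else f"{volts:.1f} V"
            lines.append(f"  {domain}: {default}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(describe(TOPOLOGY))
```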

Sapphire Rapids AVX2, AVX-512, and TMUL Ratio Offset

Intel first introduced the AVX negative ratio offset on Broadwell-E processors. Successive processors adopted this feature and eventually expanded it with AVX2 and AVX-512 negative offsets. New on Sapphire Rapids is the addition of the TMUL ratio offset. TMUL stands for Tile matrix MULtiply and is an Intel Advanced Matrix Extensions (AMX) technology component. It’s designed to accelerate AI and deep learning workloads.

The ratio offsets help achieve maximum performance for SSE, AVX, and AMX workloads.

While in the past, the ratio offsets were triggered by detecting specific AVX instructions, since Skylake, Intel has implemented a more elegant frequency license-based approach. The four frequency levels are L0, L1, L2, and L3. Each level is associated with particular instructions ranging from lightest to heaviest. Each level can also be associated with one specific ratio offset.

L0 is associated with the lightest workload, and the frequency matches the maximum Per Core ratio limit. L1 is related to the AVX2 ratio offset, and L2 is associated with the AVX-512 ratio offset. The TMUL ratio offset adds another L3 frequency license on top of that.

As a rule, the ratio offset configured for a given frequency license must be equal to or higher than the preceding frequency license. In other words: L0 = 0 ≤ L1 ≤ L2 ≤ L3.

Since Ice Lake, the ratio offset is applied on a per-core basis. The ratio offset is subtracted from the core-specific ratio limit but is still subject to the other Turbo Boost ratio configuration rules.
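The offset rule and the per-core subtraction can be sketched in a few lines of Python. This is a simplified model under the assumptions stated in the text: it checks that the offsets are non-decreasing from L0 to L3 and subtracts each from a core's ratio limit, while ignoring all other Turbo Boost rules. The function name and example values are illustrative only.

```python
from typing import Dict


def licensed_ratios(core_ratio_limit: int, avx2_offset: int,
                    avx512_offset: int, tmul_offset: int) -> Dict[str, int]:
    """Ratio a core may reach under each frequency license (L0..L3)."""
    offsets = [0, avx2_offset, avx512_offset, tmul_offset]
    # Rule from the text: L0 = 0 <= L1 <= L2 <= L3.
    if any(later < earlier for earlier, later in zip(offsets, offsets[1:])):
        raise ValueError("offsets must satisfy 0 <= AVX2 <= AVX-512 <= TMUL")
    licenses = ("L0", "L1", "L2", "L3")
    return {lic: core_ratio_limit - off for lic, off in zip(licenses, offsets)}


if __name__ == "__main__":
    # Example: a 49X core limit with an offset of 2 for every license.
    print(licensed_ratios(49, 2, 2, 2))
```

With a 49X limit and an offset of 2, the heavier licenses resolve to 47X in this model.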

I didn’t have sufficient time yet to look too deeply into the specific ratio offset behavior for Sapphire Rapids, so I’ll have to get back to this topic in future videos.

Xeon w5-3435X Simple Fixed OC Manual Tuning

In this strategy, we’re pursuing a traditional CPU overclock, using one ratio and voltage for all CPU P-cores. The main limiting factor for this type of overclock is our worst-case scenario stability test: Prime95 Small FFTs with AVX2 enabled.

That may surprise some of you, as we’d expect the AVX-512 workload to be heavier. But as we demonstrated in the Xeon w7-2495X SkatterBencher guide, AVX2 produces a higher CPU package power with a higher CPU temperature.

The main limiting factor for the maximum frequency is not the core’s overclocking capabilities but the maximum voltage we can use in our worst-case scenario workload. The maximum allowed temperature for the Sapphire Rapids Xeon w5-3435X CPUs is 98 degrees Celsius. With the water-cooled OC preset, we already reached that temperature in the Prime95 AVX2 test.

Side note: I mistakenly implied in the previous SkatterBencher Sapphire Rapids guide that the maximum temperature is the same for each SKU. However, the maximum allowed temperature differs from SKU to SKU and ranges from 94 degrees Celsius for the w7-2475X and w7-2495X to 100 degrees Celsius for the w5-2455X.

As you know, power scales with the square of the operating voltage. For example, a 10% increase in voltage on this CPU increases power consumption by about 21%. Ultimately, the operating voltage is the main limiting factor for our maximum frequency.
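The 21% figure follows from the usual first-order model in which dynamic power is proportional to frequency times voltage squared. The sketch below assumes that model and a fixed frequency. It is an approximation, not a measurement, and the function name is made up.

```python
def relative_power(voltage_scale: float, frequency_scale: float = 1.0) -> float:
    """First-order dynamic power model: P ~ f * V^2 (relative to baseline)."""
    return frequency_scale * voltage_scale ** 2


if __name__ == "__main__":
    increase = relative_power(1.10) - 1.0
    print(f"+10% voltage at fixed frequency: {increase:.0%} more power")
```

Leakage and temperature effects are left out, so real-world scaling can be steeper than this model suggests.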

I found that for this CPU, the maximum voltage was around 1.21V. That was sufficient to set an all-core frequency of 4.9 GHz. That's 200 MHz higher than stock when up to 2 cores are active and 1.2 GHz higher than stock when all 16 cores are active.

In addition to increasing the CPU core voltage, we slightly increase the VccIN voltage to 2.2V. That’s to make it easier on the VccIN VRM. After all, a higher voltage means a lower current at a given input power in Watts.
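The current argument is simply I = P / V. The sketch below compares the VccIN current at the 1.8V default and at 2.2V for an illustrative 600 W input. It ignores FIVR and VRM losses, and the helper name is hypothetical.

```python
def input_current_a(power_w: float, voltage_v: float) -> float:
    """Current drawn from a rail at a given power: I = P / V."""
    return power_w / voltage_v


if __name__ == "__main__":
    power = 600.0  # illustrative input power in watts
    for vccin in (1.8, 2.2):
        amps = input_current_a(power, vccin)
        print(f"VccIN {vccin:.1f} V -> {amps:.0f} A")
```

At the same power, the higher input voltage cuts the current the VccIN VRM has to deliver by roughly a fifth.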

BIOS Settings & Benchmark Results

Upon entering the BIOS

- Go to the Ai Tweaker menu

- Set ASUS MultiCore Enhancement to Enabled – Remove All limits

- Set CPU Core Ratio to By Core Usage

- Enter the By Core Usage submenu

- Set Turbo Ratio Limit 1 to 49

- Set Turbo Ratio Cores 1 to 16

- Leave the By Core Usage submenu

- Set DRAM Frequency to DDR5-6600MHz

- Enter the AVX Related Controls submenu

- Set AVX2, AVX512, and TMUL Ratio Offset to per-core Ratio Limit to User Specify

- Set AVX2, AVX512, and TMUL Ratio Offset to 2

- Leave the AVX Related Controls submenu

- Enter the DRAM Timing Control submenu

- Enter the Memory Presets submenu

- Select Load Hynix 6800 1.4V 8x16GB SR

- Select Yes

- Leave the DRAM Timing Control submenu

- Set Vcore 1.8V IN to Manual mode

- Set CPU Core Voltage Override to 2.2

- Set Global Core SVID Voltage to Manual Mode

- Set CPU Core Voltage Override to 1.215

Then save and exit the BIOS.

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- SuperPI 4M: +12.10%

- Geekbench 6 (single): +12.21%

- Geekbench 6 (multi): +28.27%

- Cinebench R23 Single: +13.00%

- Cinebench R23 Multi: +33.03%

- CPU-Z V17.01.64 Single: +15.66%

- CPU-Z V17.01.64 Multi: +32.14%

- V-Ray 5: +32.26%

- AI Benchmark: +36.51%

- Y-Cruncher PI MT 10B: +35.70%

- Blender Monster: +32.33%

- Blender Classroom: +33.75%

- 3DMark Night Raid: +29.31%

- Nero Score: +17.96%

- Handbrake: +34.38%

- CS:GO FPS Bench: +4.63%

- Tomb Raider: +6.81%

- Final Fantasy XV: +1.91%

Here are the 3DMark CPU Profile scores

- CPU Profile 1 Thread: +16.34%

- CPU Profile 2 Threads: +18.05%

- CPU Profile 4 Threads: +29.02%

- CPU Profile 8 Threads: +37.03%

- CPU Profile 16 Threads: +40.55%

- CPU Profile Max Threads: +33.01%

Running the Xeon w5-3435X at 4.9 GHz across the board represents an increase of 200 to 1200 MHz across the various scenarios. So, naturally, we expect significant performance gains in single-threaded and multi-threaded workloads. We get a maximum performance improvement of +40.55% in 3DMark CPU Profile 16 Threads.

When running Prime95 Small FFTs with AVX2 enabled, the average CPU effective clock is 4690 MHz with 1.216 volts. The average CPU temperature is 95.0 degrees Celsius. The ambient and water temperatures are 26.8 and 35.2 degrees Celsius, respectively. The average CPU package power is 609.2 watts.

When running Prime95 Small FFTs with AVX disabled, the average CPU effective clock is 4742 MHz with 1.216 volts. The average CPU temperature is 82.0 degrees Celsius. The ambient and water temperatures are 27.2 and 33.8 degrees Celsius, respectively. The average CPU package power is 498.5 watts.

OC Strategy #4: Simple Dynamic Overclock

In our final overclocking strategy, we pursue a modern, dynamic manual overclock. We must discuss Intel’s overclocking toolkit for Sapphire Rapids to explore how we can do this.

Sapphire Rapids Overclocking Toolkit

I described the history of Intel’s overclocking toolkit in a previous blog post titled: “How is Alder Lake Non-K Overclocking Even Possible?!” Long story short, Intel developed and maintained a technology called the OC Mailbox which contains the entire overclocker’s toolkit. This toolkit is not always the same for each CPU architecture, as sometimes we need different tools.

On Sapphire Rapids, the overclocking toolkit consists of the following tools:

- Per Core ratio and voltage control

- Mesh ratio and voltage control

- DRAM ratio control

- AVX2, AVX-512, and TMUL ratio offset

- Turbo Boost 2.0 ratio and power control

- Turbo Boost Max 3.0 ratio control

- SVID disable

- XMP 3.0 support

- XTU Support

Notably missing from the OC toolbox are prominent features we know from mainstream desktop like Advanced Voltage Offset, better known as V/F points, and OverClocking Thermal Velocity Boost, or OCTVB.

As demonstrated in my first Sapphire Rapids overclocking guide, setting up a dynamic overclock is tricky with these CPUs. There are two main reasons for that.

First, as I highlighted in OC Strategy #2, strangely enough, every core inside this Xeon w5-3435X has a factory-fused voltage frequency point up to 47X despite all but four cores being limited to 45X. Furthermore, the fused voltage for these cores is exceptionally high: 1.37V.

The unfortunate consequence of this situation is that there’s simply no way to use per-core adaptive voltage for any cores with this high factory-fused voltage. That’s because (a) the voltage is unreasonably high for any all-core loads and (b) due to the adaptive voltage rules, we cannot configure a lower voltage.

Second, even if we could set an adaptive voltage, the integrated voltage regulator has difficulty dealing with rapid transient loads at higher dynamic voltages.

Xeon w5-3435X Simple Dynamic OC Manual Tuning

For this OC strategy, I use a combination of per-core override voltage, per-core ratio limit, and custom Turbo Boost 2.0 ratio configuration.

The practical side of manual tuning is similar to the one from OC Strategy #3. It involves running several stability tests to find the maximum stable frequency for each core at its set per-core override voltage. I set a per-core override voltage ranging from 1.215V for most cores to 1.25V for a few cores.

I increased the maximum per-core ratio for each core with the additional voltage headroom. The maximum CPU ratio ranges from 48X for core 8 to 52X for core 2. Furthermore, I was able to increase the all-core frequency to 5.0 GHz. That means we’re now 1.3 GHz higher than the stock all-core frequency.
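The per-core search can be thought of as a simple loop: for each core, keep raising the ratio until the stability test fails, then record the last ratio that passed. The Python sketch below is only a model of that procedure. `is_stable` is a hypothetical callback standing in for whatever per-core stability test you run at the core's override voltage, and the simulated limits in the example are placeholders.

```python
from typing import Callable, Dict, Iterable, Optional


def find_max_stable_ratios(
    cores: Iterable[int],
    start_ratio: int,
    max_ratio: int,
    is_stable: Callable[[int, int], bool],
) -> Dict[int, Optional[int]]:
    """Highest passing ratio per core, or None if start_ratio already fails."""
    result: Dict[int, Optional[int]] = {}
    for core in cores:
        best: Optional[int] = None
        for ratio in range(start_ratio, max_ratio + 1):
            if not is_stable(core, ratio):
                break  # first failure ends the search for this core
            best = ratio
        result[core] = best
    return result


if __name__ == "__main__":
    # Simulated silicon: pretend each core passes up to this ratio.
    simulated_limit = {0: 51, 2: 52, 8: 48}

    def fake_test(core: int, ratio: int) -> bool:
        return ratio <= simulated_limit[core]

    print(find_max_stable_ratios(simulated_limit, 48, 52, fake_test))
```

In practice each call to the stability test takes a long time, so the real cost is in the test runs rather than the loop.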

BIOS Settings & Benchmark Results

Upon entering the BIOS

- Go to the Ai Tweaker menu

- Set ASUS MultiCore Enhancement to Enabled – Remove All limits

- Set CPU Core Ratio to By Core Usage

- Enter the By Core Usage sub-menu

- Set Turbo Ratio Limit 1 to 52

- Set Turbo Ratio Cores 1 to 12

- Set Turbo Ratio Limit 2 to 51

- Set Turbo Ratio Cores 2 to 14

- Set Turbo Ratio Limit 3 to 50

- Set Turbo Ratio Cores 3 to 16

- Leave the By Core Usage sub-menu

- Enter the Specific Core submenu

- Set Core 0 Specific Ratio Limit to 51

- Set Core 1, 3, 5, 10, 12, and 15 Specific Ratio Limit to 50

- Set Core 2 Specific Ratio Limit to 52

- Set Core 4, 6, 7, 9, 11, 13, and 14 Specific Ratio Limit to 49

- Set Core 8 Specific Ratio Limit to 48

- For all Cores, set Core Specific Voltage to Manual Mode

- Set CPU Core 0, 2, and 12 Voltage Override to 1.25

- Set CPU Core 1, 3, 4, 5, 6, 7, 8, 9, 10, 11, 13, 14, and 15 Voltage Override to 1.215

- Leave the Specific Core submenu

- Set DRAM Frequency to DDR5-6600MHz

- Enter the AVX Related Controls submenu

- Set AVX2, AVX512, and TMUL Ratio Offset to per-core Ratio Limit to User Specify

- Set AVX2, AVX512, and TMUL Ratio Offset to 2

- Leave the AVX Related Controls submenu

- Enter the DRAM Timing Control submenu

- Enter the Memory Presets submenu

- Select Load Hynix 6800 1.4V 8x16GB SR

- Select Yes

- Leave the DRAM Timing Control submenu

- Set Max. CPU Cache Ratio to 27

- Set Vcore 1.8V IN to Manual Mode

- Set CPU Core Voltage Override to 2.3

Then save and exit the BIOS.
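To see how these settings interact, the Python sketch below resolves the ratio a core may run at for a given number of active cores. It assumes a core runs at the lower of its Specific Ratio Limit and the by-core-usage Turbo Ratio Limit, which is the rule described in the comment section of this guide. AVX offsets and other limits are ignored, and the names are illustrative.

```python
# (max active cores, ratio) pairs from the By Core Usage settings above.
TURBO_RATIO_LIMITS = [(12, 52), (14, 51), (16, 50)]

# Per-core Specific Ratio Limits from the Specific Core settings above.
CORE_RATIO_LIMIT = {0: 51, 2: 52, 8: 48}
CORE_RATIO_LIMIT.update({core: 50 for core in (1, 3, 5, 10, 12, 15)})
CORE_RATIO_LIMIT.update({core: 49 for core in (4, 6, 7, 9, 11, 13, 14)})


def turbo_limit(active_cores: int) -> int:
    """Turbo Ratio Limit for a given number of active cores."""
    for max_active, ratio in TURBO_RATIO_LIMITS:
        if active_cores <= max_active:
            return ratio
    raise ValueError("more active cores than configured")


def effective_ratio(core: int, active_cores: int) -> int:
    """Assumed rule: the lower of the two configured limits wins."""
    return min(CORE_RATIO_LIMIT[core], turbo_limit(active_cores))


if __name__ == "__main__":
    for core, active in [(2, 1), (2, 16), (8, 16)]:
        print(f"core {core}, {active} active: {effective_ratio(core, active)}X")
```

In this model core 2 reaches 52X in light loads but drops to 50X with all 16 cores active, while core 8 stays at 48X regardless.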

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- SuperPI 4M: +17.27%

- Geekbench 6 (single): +16.36%

- Geekbench 6 (multi): +29.76%

- Cinebench R23 Single: +20.36%

- Cinebench R23 Multi: +34.02%

- CPU-Z V17.01.64 Single: +19.81%

- CPU-Z V17.01.64 Multi: +33.39%

- V-Ray 5: +34.75%

- AI Benchmark: +41.04%

- Y-Cruncher PI MT 10B: +43.02%

- Blender Monster: +33.12%

- Blender Classroom: +34.57%

- 3DMark Night Raid: +29.61%

- Nero Score: +24.54%

- Handbrake: +33.20%

- CS:GO FPS Bench: +6.14%

- Tomb Raider: +7.85%

- Final Fantasy XV: +1.75%

Here are the 3DMark CPU Profile scores

- CPU Profile 1 Thread: +23.07%

- CPU Profile 2 Threads: +23.51%

- CPU Profile 4 Threads: +29.23%

- CPU Profile 8 Threads: +37.68%

- CPU Profile 16 Threads: +40.99%

- CPU Profile Max Threads: +37.96%

While it’s not as easy to configure a dynamic overclock on Sapphire Rapids compared to its mainstream desktop counterparts, we see the usual improvements compared to a fixed, static overclock. Across the board, we get about 3% better performance with our dynamic overclock. We get the highest performance improvement of +43.02% in Y-Cruncher.

When running Prime95 Small FFTs with AVX2 enabled, the average CPU effective clock is 4737 MHz with 1.223 volts. The average CPU temperature is 97.0 degrees Celsius. The ambient and water temperatures are 27.8 and 36.4 degrees Celsius, respectively. The average CPU package power is 602.3 watts.

When running Prime95 Small FFTs with AVX disabled, the average CPU effective clock is 4837 MHz with 1.223 volts. The average CPU temperature is 79.0 degrees Celsius. The ambient and water temperatures are 27.3 and 36.7 degrees Celsius, respectively. The average CPU package power is 496.6 watts.

Intel Xeon w5-3435X: Conclusion

All right, let us wrap this up.

As I said in my previous Sapphire Rapids video: I love that Intel offers no less than eight overclockable Sapphire Rapids SKUs. When I first heard about overclockable Xeons, I figured they’d have a single halo SKU like with the W-3175X. Having eight is just fantastic.

The Xeon w5-3435X I overclocked in this video is the first multi-tile CPU I’ve tried. While I’d love to say that the overclocking experience is new, it’s similar to the monolithic Sapphire Rapids CPU I overclocked before. I think the fact that the overclocking experience translates so well from one packaging technology to the next speaks volumes about the maturity of Intel’s overclocking program.

Lastly, there’s still lots to uncover about Sapphire Rapids overclocking. I will try to cover some topics more in-depth in future videos.

Anyway, that’s all for today! I want to thank my Patreon supporters for supporting my work. As usual, if you have any questions or comments, please drop them in the comment section below.

See you next time!

Petros

Hi Pieter,

Some 2 years later, I find this article very useful. Thank you very much for this! I have this motherboard and will probably obtain the 3435X.

Some questions that I have:

1) In your scenario 4, it is obvious that I cannot just blindly copy the per-core values from your overclock; I have to define the “best” and “worst” cores for my specific CPU. Can you please elaborate (or point me to the article) on how you identified your best and worst cores? Any particular approach or recommendation? If I interpret your fig. 51 correctly, ITBM3 regards C0, C2, C12 and C14 as “best”, but you set only C2 to run at 52x and only C0 to run at 51x, and C14 is good only for 49x?

2) The same goes for the voltages: how did you arrive at 1.215V and 1.25V? If I do the same dynamic overclocking, do I have to find voltages that are good for my specific CPU, or can I just re-use yours?

3) Judging by your BIOS settings, setting “Turbo ratio limit” to 50 takes precedence over the specific core ratio limits, is this correct? On fig. 55 I can see “Max Core effective clocks” being 4999MHz. Or is it just because the faster cores work at 51x and 52x, so they even out the slower cores and the average is ~5GHz?

4) Are there any negative consequences or side effects if I run those core voltages, and particularly the 1.8V IN voltage elevated to 2.3V, long term? As in, set it at 2.3V and leave it at that for years to come?

5) In the case of dynamic overclocking, do you happen to know or remember what the voltages and power are like when the system is idle? Does it drop voltages and frequencies to idle levels or keep them elevated? Fig. 55 shows a minimum of 487W and 4800MHz, but I don’t think the system idles at those values, does it? Default values for idle are ~80W and 800MHz, I believe.

6) Would you recommend to unconditionally set mesh freq. at 2700MHz and leave it at that? No ill side-effects?

7) Have you taken a look at the refreshed SPR-W parts like the w5-3535X? If yes, did you notice any improvements compared to the 34xx? If no, do you intend to look at them?

Thank you once again for this great article!

Pieter

Hi,

It’s been a while since I touched this platform so my knowledge on it is a bit rusty. But I’ll try to answer to the best of my abilities.

1) I covered the Sapphire Rapids tuning process in greater detail in the w7-3465X guide. The process is essentially using CoreCycler to find which cores can pass at a certain voltage. Unfortunately, it’s not as simple as relying on the ITBM3 favored cores to identify the best cores. https://skatterbencher.com/2023/09/02/skatterbencher-63-intel-xeon-w7-3465x-overclocked-to-5100-mhz/#Xeon_w7-3465X_Simple_Dynamic_OC_Manual_Tuning

2) Selecting the voltage is usually a matter of checking the operating temperature under load. If you look at OC Strategy #1, you can see we’re hitting TjMax with about 1.226V in the all-core workload, so selecting a voltage around this makes sense. I explain it in more detail here: https://skatterbencher.com/2023/05/20/skatterbencher-61-intel-xeon-w5-3435x-overclocked-to-5200-mhz/#Xeon_w5-3435X_Simple_Fixed_OC_Manual_Tuning.

3) The rule for the core ratio is that the core runs at the lowest of the configured ratios. So if the Core Ratio Limit is 51X and the Turbo Ratio Limit is 50X, then the core will run at 50X.

4) I don’t think there’s any out-of-the-ordinary risk associated with the higher VIN voltage. That said, obviously everything that’s out of spec may affect lifespan.

5) Can’t recall those details. Usually I don’t check idle power consumption and voltages. I only report under load. However, I do recall Sapphire Rapids power consumption at idle being quite high. Like, above 100W.

6) If I recall correctly, 2.7 GHz is “set and forget” for the Mesh. I don’t recall any major issues with it.

7) I didn’t look at Sapphire Rapids Refresh, unfortunately. So I don’t have any hands-on experience to share. I don’t plan to look at them since Granite Rapids is right around the corner.

Hope this helps!

Pieter.

Petros

It certainly does help. Thank you for the prompt reply!

Kevin

Hi, thanks for the info!

Since SkatterBencher mentioned per-core voltage adjustment, I wonder if this is available for Raptor Lake CPUs?

I cannot find per-core adjustment on my Z690 motherboard, but I do hope this feature works on my platform. My 6th E-core has the worst bin of all cores and requires 20-30 mV more to be Prime95 Small FFT stable. If I could manually tune the voltage for each E-core cluster, I could undervolt the other cores more while undervolting the second E-core cluster less.

Pieter

Hi Kevin,

Great question! The Per Core Voltage tool has been on Intel mainstream desktop since 12th gen Alder Lake, so also on Raptor Lake. However, the implementation is not the same as on Sapphire Rapids.

Raptor Lake CPUs have a single external VccIA voltage rail powering every P-core, E-core cluster, and the Ring. That means these parts always share the same voltage. Sapphire Rapids, however, has an integrated voltage regulator that provides the voltage for each CPU P-core separately.

What that means for Raptor Lake is that, while you can configure an adaptive per-core voltage, the effect will not be that you can increase the voltage for a specific core. Instead, adjusting the per-core voltage may adjust the voltage for all parts powered by the VccIA rail.

I’ll try to illustrate with an example how it works. Let’s say we have 8 P-cores which have a V/F curve defining 50X at 1.1V and 60X at 1.45V for each P-core. Next, we overclock the CPU to 62X and set the adaptive per-core voltage to 1.50V for each core, except Core 0. We set Core 0 adaptive voltage to 1.55V (because it can’t overclock that well). Now, the frequency and voltage are as follows:

– When all P-cores are active: 50X at 1.1V, 60X at 1.45V, 62X at 1.55V

– When only P-core 0 is active: 50X at 1.1V, 60X at 1.45V, 62X at 1.55V

– When P-core 1-7, but not P-core 0, is active: 50X at 1.1V, 60X at 1.45V, 62X at 1.50V

Whenever P-core 0 is active and the target ratio is 62X, the voltage for every other active P-core will be 1.55V because that’s what we set the P-core 0 per-core adaptive voltage to. After all, the P-cores share the same VccIA voltage rail and the voltage for that rail is the highest requested among all parts that share that rail (P-cores, E-cores, Ring).
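The same example can be written as a tiny Python model: the shared rail takes the highest voltage requested by any active core. This sketch only covers the 62X point of the example and leaves out E-core and Ring requests. The names are made up for illustration.

```python
from typing import Iterable

# Per-core adaptive voltage at 62X from the example: 1.50 V, Core 0 at 1.55 V.
ADAPTIVE_V_AT_62X = {core: 1.50 for core in range(8)}
ADAPTIVE_V_AT_62X[0] = 1.55


def rail_voltage(active_cores: Iterable[int]) -> float:
    """Shared-rail voltage: the highest request among the active cores."""
    return max(ADAPTIVE_V_AT_62X[core] for core in active_cores)


if __name__ == "__main__":
    print("All P-cores active:", rail_voltage(range(8)))
    print("Only P-core 0 active:", rail_voltage([0]))
    print("P-cores 1-7 active:", rail_voltage(range(1, 8)))
```

As soon as P-core 0 is among the active cores, every core on the rail sees its higher request.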

Hope this helps!