SkatterBencher #40: NVIDIA GeForce GT 1030 Overclocked to 2050 MHz

We overclock the NVIDIA GeForce GT 1030 up to 2050 MHz with the ElmorLabs EVC2SX and EK-Thermosphere liquid cooling.

NVIDIA GeForce GT 1030: Introduction

The NVIDIA GeForce GT 1030 is the second slowest graphics card in NVIDIA’s Pascal lineup. NVIDIA released the GPU on May 17, 2017, about one year after the release of its bigger brother, the GTX 1080.

The GeForce GT 1030 utilizes the GP108 graphics core produced using Samsung’s 14nm FinFET process. The graphics core houses 384 CUDA cores and comes with a base clock of 1228 MHz and a boost clock of 1468 MHz. There is both a DDR4 and GDDR5 variant. We’re using a GDDR5 model in this guide, and the memory clocks in at 1500 MHz.

The maximum GPU temperature is 97 degrees Celsius, and the graphics card power is limited to 30W. The latter will become very relevant once we get to the overclocking.

In today’s video, we tackle overclocking the GeForce GT 1030. We will cover five overclocking strategies.

- First, we increase the GPU and memory frequency using NVIDIA’s OC Scanner

- Second, we manually increase the GPU and memory frequency with the NVIDIA Inspector software tool

- Third, we further increase the GPU and memory frequency with the ASUS GPU Tweak III software tool

- Fourth, we use the ElmorLabs EVC2SX to work around some of the critical overclocking limitations

- Lastly, we slap on an EK-Thermosphere water block to get the most performance out of our GPU

Before we jump into the overclocking, let us quickly go over the hardware and benchmarks we use in this video.

NVIDIA GeForce GT 1030: Platform Overview

Along with the ASUS Phoenix GeForce GT 1030 2GB GDDR5 graphics card, in this guide, we use an Intel Core i9-12900K processor, an ASUS ROG Maximus Z690 Extreme motherboard, a pair of generic 16GB DDR5 Micron memory sticks, a 512GB Aorus M.2 NVMe SSD, an Antec HCP 1000W Platinum power supply, a Noctua NH-L9i-17xx chromax.black heatsink, the ElmorLabs Easy Fan Controller, the ElmorLabs EVC2SX, EK-Pro QDC Kit P360 water cooling, and the EK-Thermosphere water block. All this is mounted on top of our favorite Open Benchtable V2.

The cost of the components should be around $3,714.

- ASUS Phoenix GeForce GT 1030 2GB GDDR5 graphics card: $190

- Intel Core i9-12900K processor: $610

- ASUS ROG Maximus Z690 Extreme motherboard: $1,100

- 32GB DDR5-4800 Micron memory: $250

- AORUS RGB NVMe M.2 512GB SSD: $90

- Antec HCP 1000W Platinum power supply: $200

- Noctua NH-L9i-17xx chromax.black heatsink: $55

- ElmorLabs Easy Fan Controller: $20

- ElmorLabs EVC2SX: $32

- EK-Pro QDC Kit P360: $893

- EK-Thermosphere: $74

- Open Benchtable V2: $200
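As a quick sanity check on the total, the listed prices can be summed in a few lines of Python. The dictionary keys are shortened labels chosen for this sketch; the prices are the ones listed above.

```python
# Prices in USD as listed above; the keys are shortened labels for this sketch.
component_prices = {
    "ASUS Phoenix GeForce GT 1030": 190,
    "Intel Core i9-12900K": 610,
    "ASUS ROG Maximus Z690 Extreme": 1100,
    "Micron DDR5-4800 memory": 250,
    "AORUS RGB NVMe M.2 512GB SSD": 90,
    "Antec HCP 1000W Platinum": 200,
    "Noctua NH-L9i-17xx chromax.black": 55,
    "ElmorLabs Easy Fan Controller": 20,
    "ElmorLabs EVC2SX": 32,
    "EK-Pro QDC Kit P360": 893,
    "EK-Thermosphere": 74,
    "Open Benchtable V2": 200,
}

total = sum(component_prices.values())
print(f"Total: ${total:,}")  # prints: Total: $3,714
```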

The component selection doesn’t quite match the GT 1030 segment. This video’s primary purpose is to show how to overclock a GT 1030, not whether it makes sense from a value-for-money perspective.

NVIDIA GeForce GT 1030: Benchmark Software

We use Windows 11 and the following benchmark applications to measure performance and ensure system stability.

- Geekbench 5 (CUDA, OpenCL, Vulkan) https://www.geekbench.com/

- Geeks3D FurMark https://geeks3d.com/furmark/

- PhysX FluidMark https://www.ozone3d.net/benchmarks/physx-fluidmark/

- 3DMark Night Raid https://www.3dmark.com/

- Unigine Superposition https://benchmark.unigine.com/superposition

- Spaceship https://store.steampowered.com/app/1605230/Spaceship__Visual_Effect_Graph_Demo/

- CS:GO FPS Bench https://steamcommunity.com/sharedfiles/filedetails/?id=500334237

- Final Fantasy XV http://benchmark.finalfantasyxv.com/na/

- GPUPI https://matthias.zronek.at/projects/gpupi-a-compute-benchmark

- GPU-Z Render Test https://www.techpowerup.com/gpuz/

As you can see, the benchmarks are pretty different from what we usually use and even slightly different from our integrated graphics overclocking videos.

I wanted to include the AI Benchmark, but it turns out the benchmark does not run very well on graphics cards with less than 4 GB of memory, and I could not make it run.

NVIDIA GeForce GT 1030: Stock Performance

Before starting any overclocking, we must first check the system performance at default settings.

Please note that the ASUS Phoenix GeForce GT 1030 comes with an additional boost over the standard GT 1030 as our ASUS card has a listed boost frequency of 1506 MHz versus the standard 1468 MHz. The boost topic is a little convoluted, but we’ll dig into that in a second.

Here is the benchmark performance at stock:

- Geekbench 5 CUDA: 10,843 points

- Geekbench 5 OpenCL: 11,274 points

- Geekbench 5 Vulkan: 10,560 points

- Furmark 1080P: 1,138 points

- FluidMark 1080P: 2.544 points

- 3DMark Night Raid: 16,336 marks

- Unigine Superposition: 2,566 points

- Spaceship: 21.2 fps

- CS:GO FPS Bench: 96.67 fps

- Final Fantasy XV: 18.96 fps

- GPUPI 1B CUDA: 91.260 seconds

When running Furmark GPU Stress Test, the average GPU clock is 1414 MHz with 0.872 volts, and the GPU Memory clock is 1502 MHz with 1.34 volts. The average GPU temperature is 46.9 degrees Celsius, and the average GPU Hot Spot Temperature is 54.9 degrees Celsius. The average GPU power is 29.547 watts.

When running the GPU-Z Render Test, the maximum GPU Clock is 1747 MHz with 1.075V.

Now let’s jump into the overclocking. But before we do that, let’s have a closer look at the NVIDIA GPU Boost Technology. Understanding how this technology works will be fundamental to understanding how to overclock and improve performance.

Brief History of NVIDIA GPU Boost Technology

NVIDIA has come a long way since its founding in 1993. It released the world’s first GPU in 1999 in the form of the GeForce 256. In 2006, it released its first CUDA-enabled graphics cards in the form of the GeForce 8800 GTX. Nowadays, like with CPUs, the increases in operating frequency play only a minor part in the vast generational improvements in GPU compute performance. But frequency is the part of the product that interests overclockers.

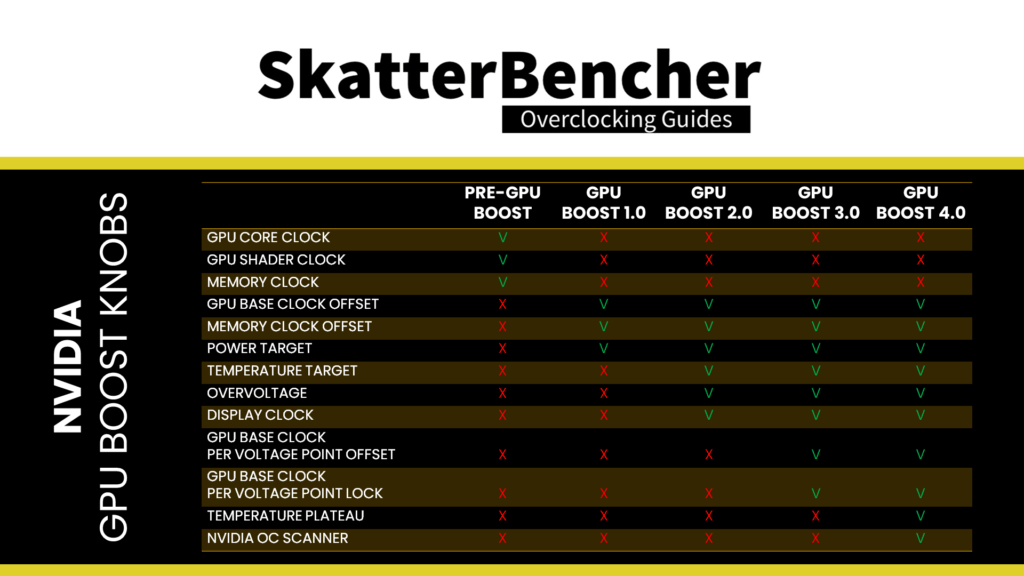

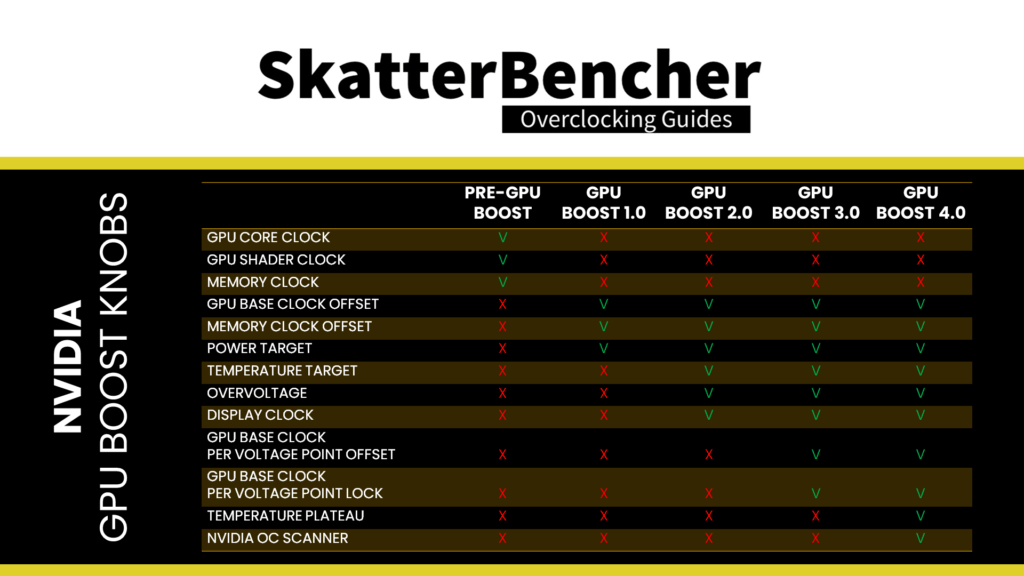

Pre-GPU Boost

NVIDIA’s first GPU in 1999 featured a 220nm chip clocked at 120 MHz and was paired with 166 MHz SDR memory. It was just these two frequencies that overclockers could tune until NVIDIA released the Tesla architecture in 2006. By 2006, NVIDIA’s fastest GPU clocked in at around 650 MHz core frequency paired with 800 MHz GDDR3 memory.

The Tesla architecture was NVIDIA’s first microarchitecture to implement unified shaders and came to market in the form of the GeForce 8 series with GeForce 8800 GTX as its flagship product.

GeForce 8’s unified shader architecture consists of many stream processors (SPs). Unlike the vector processing approach taken in the past, each stream processor is scalar and thus can operate only on one component at a time. That makes them less complex to build while still being quite flexible and universal. The lower maximum throughput of these scalar processors is compensated for by efficiency and their ability to run at higher clock speeds. GeForce 8 runs the various parts of its core at differing clock domains. For example, the stream processors of GeForce 8800 GTX operate at a 1.35 GHz clock rate while the rest of the chip is running at 575 MHz.

Fermi succeeded the Tesla microarchitecture and entered the market with its flagship product, the GeForce GTX 480, in 2010. Fermi moves from Stream Processors (SP) to Streaming Multiprocessors (SMs). Within the SMs, different units still operate on different clocks: some operate on the core clock and others on the shader clock. However, the two clocks were hard-linked as the shader clock ran at twice the speed of the core clock. NVIDIA would later do away with the shader clock in Fermi’s successor Kepler and retain just the core clock frequency.

Fermi’s thermal performance, or the lack thereof, became a bit of an internet meme as many users struggled to keep their cards within the desired temperature range. Further fueling the fire – pun intended – were hardware reviewers reporting that their GeForce GTX 590 graphics cards exploded when attempting minor overclocking tests with increased voltage levels.

While it’s hard to say whether the GeForce GTX 590 PR disaster is a direct cause for NVIDIA locking down overclocking and overvolting, NVIDIA has restricted overclocking ever since Fermi’s successor Kepler. Today, we can only make changes to the GPU Boost Algorithm and are strictly limited in overvolting.

GPU Boost 1.0 – Kepler, 2012

In March 2012, NVIDIA launched their brand new Kepler architecture as the successor of Fermi, which had been serving for two GeForce generations since 2010. The Kepler architecture featured many innovations, including the SMX architecture, Dynamic Parallelism, and Hyper-Q. While these technologies drove the inter-generational performance improvements, another technology would drive the intra-generational performance: NVIDIA GPU Boost.

The design challenge that GPU Boost addresses is a very straightforward one. When drafting the specifications for a new graphics card, traditionally, you would adapt it to the worst-case scenario. Unfortunately, the worst-case scenario is rarely a good indication of a typical workload scenario but is a major limiting factor for voltage, frequency, and ultimately performance. In most typical workloads, you’re not achieving the maximum possible performance.

NVIDIA addresses this design challenge with GPU Boost 1.0 technology for the first time. GPU Boost 1.0 is a GPU clocking technique that converts available power headroom into performance.

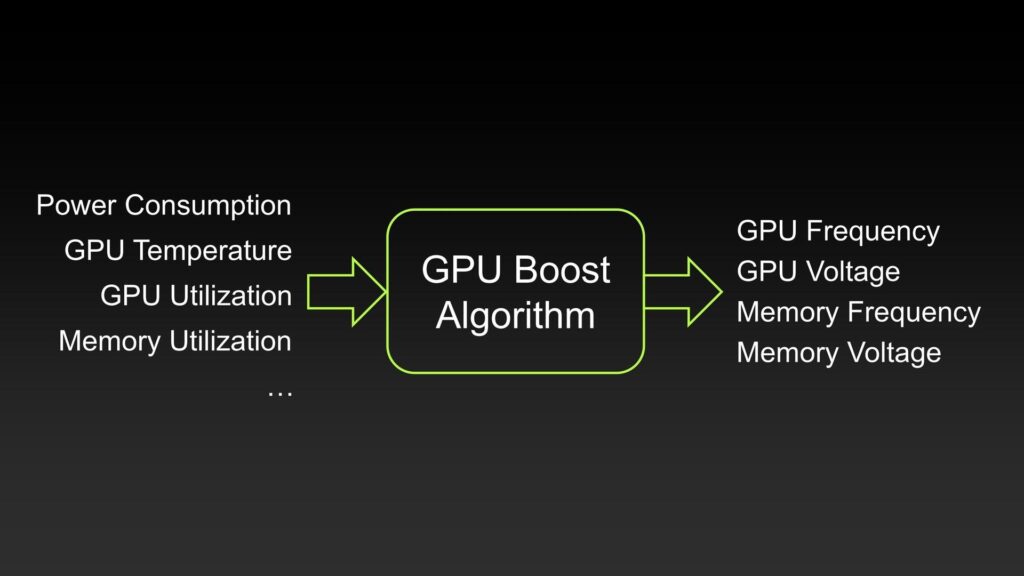

Kepler-based GPUs have dedicated hardware circuitry that monitors various aspects of the graphics card, including the power consumption, temperatures, utilization, and so on. The GPU Boost algorithm processes all these inputs to determine the appropriate voltage and frequency headroom. In practical terms, the GPU will dynamically increase the clock frequency when there is sufficient power headroom.

With Kepler and GPU Boost 1.0, NVIDIA also changed how it reports GPU frequencies to the customer. Instead of having a single fixed clock frequency for 3D applications, with GPU Boost 1.0, we have two different clock speeds: the Base Clock Frequency and the Boost Clock Frequency.

- The base clock frequency is the guaranteed minimum GPU frequency during full load workloads. It is equivalent to the traditional GPU clock.

- The boost clock frequency is the GPU’s average clock frequency under load in many typical non-TDP applications that require less GPU power consumption. In other words, the Boost Clock is a typical clock level achieved running a typical game in a typical environment.

The maximum boost clock frequency is … undefined, really. Well, kind of undefined.

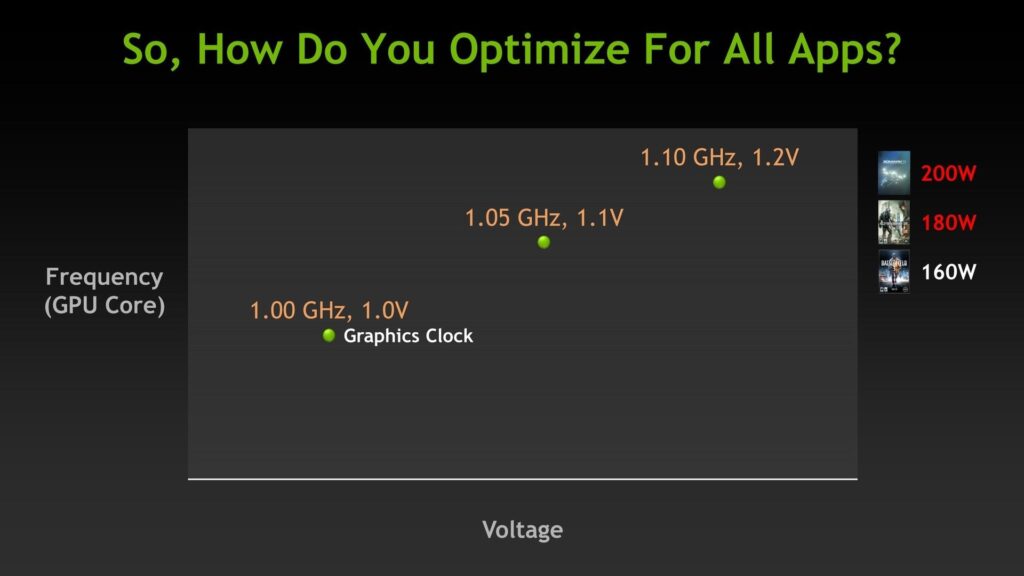

Every GPU comes with a factory-fused voltage-frequency curve with a 12.5mV and 13 MHz granularity. This curve defines the relationship between the operating voltage and operating frequency. Depending on the power headroom, the GPU Boost algorithm will select the appropriate point on the voltage-frequency curve. The advertised Boost Clock Frequency indicates where the algorithm lands in a typical scenario.

According to AnandTech’s tests, while the advertised boost clock frequency of the GeForce GTX 680 is 1058 MHz, the GPU has a peak frequency of 1110 MHz.

While the power headroom primarily drives the GPU Boost 1.0 algorithm, the GPU temperature still plays a minor role. AnandTech reports that the GTX 680 only achieves a peak frequency of 1110 MHz if the GPU temperature is below 70 degrees Celsius. When the GPU exceeds this temperature threshold, the GPU backs off to the previous point on the voltage-frequency curve.
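The temperature back-off described above can be illustrated with a toy model. This is only a sketch under stated assumptions, not NVIDIA’s actual algorithm: the function names, the 1175 mV peak voltage, and the five-point curve are made up for illustration, while the 13 MHz and 12.5mV steps, the 1110 MHz peak, and the 70 degrees Celsius threshold come from the text.

```python
from typing import List, Tuple

STEP_MHZ = 13          # frequency granularity quoted above
STEP_MV = 12.5         # voltage granularity quoted above
TEMP_THRESHOLD_C = 70  # threshold AnandTech reported for the GTX 680


def build_curve(peak_mhz: int, peak_mv: float, points: int) -> List[Tuple[float, int]]:
    """Return (millivolt, MHz) pairs in ascending order, ending at the peak."""
    return [
        (peak_mv - STEP_MV * i, peak_mhz - STEP_MHZ * i)
        for i in reversed(range(points))
    ]


def select_point(curve: List[Tuple[float, int]], power_limited_index: int,
                 temp_c: float) -> Tuple[float, int]:
    """Start from the point allowed by power, then back off one point if hot."""
    index = power_limited_index
    # The peak is only reached below the threshold, so 70 C and up backs off.
    if temp_c >= TEMP_THRESHOLD_C and index > 0:
        index -= 1
    return curve[index]


# 1175 mV is a made-up peak voltage used purely for illustration.
curve = build_curve(peak_mhz=1110, peak_mv=1175.0, points=5)
cool = select_point(curve, power_limited_index=len(curve) - 1, temp_c=60)
hot = select_point(curve, power_limited_index=len(curve) - 1, temp_c=75)
print(cool[1], hot[1])  # prints: 1110 1097
```

In this model, the power headroom decides how far up the curve the GPU may go, and the temperature check only ever removes a single 13 MHz step, which matches the minor role temperature plays in GPU Boost 1.0.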

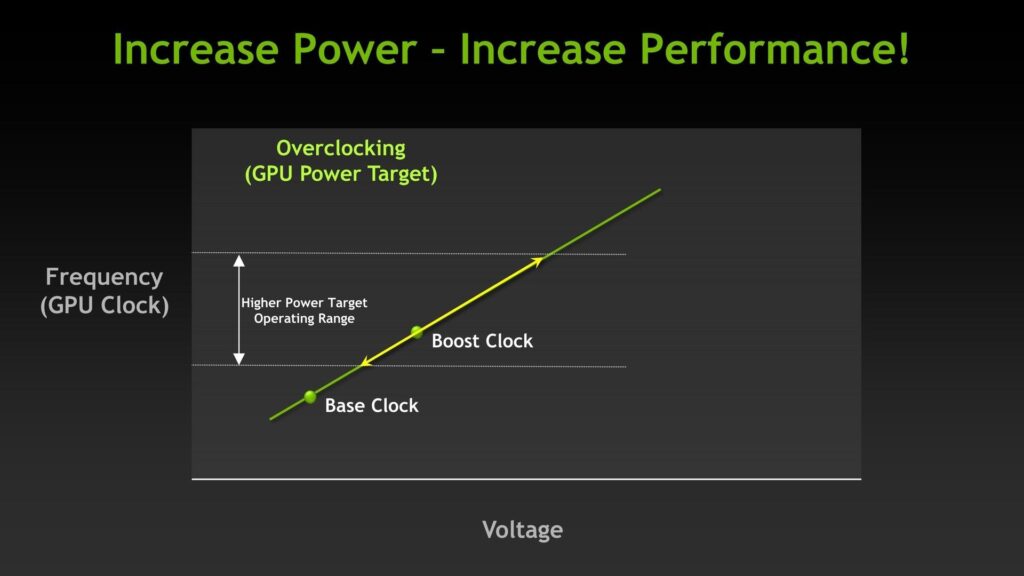

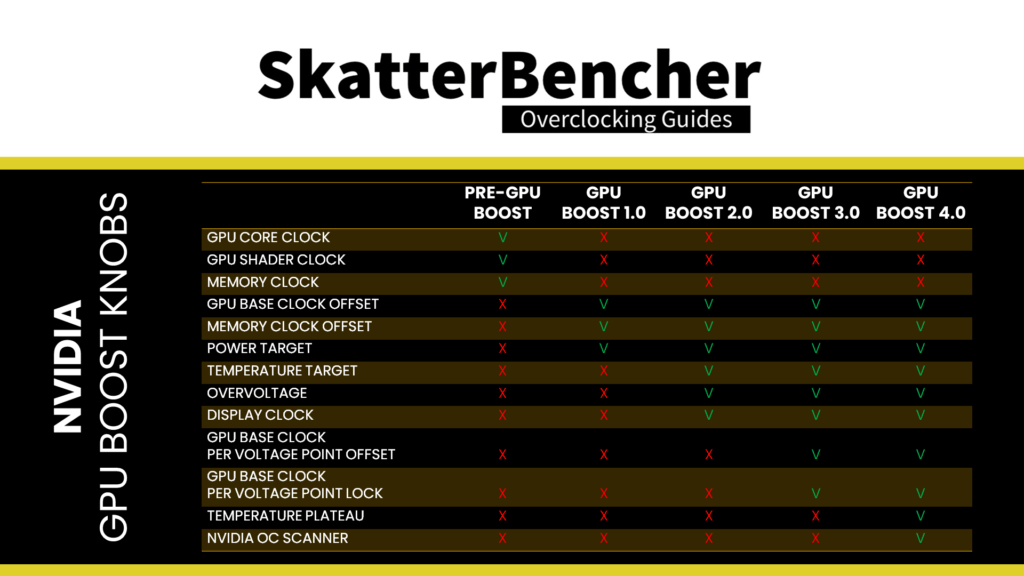

This new approach to GPU clocking also introduces a new approach to overclocking. Rather than setting a fixed voltage and fixed frequency for 3D workloads, overclocking is now all about adjusting the GPU Boost algorithm parameters. GPU Boost 1.0 provides us access to the following overclocking parameters:

- Power Target (%)

- GPU Base Clock Offset

- Memory Clock Offset

The Power Target is a bit of an odd parameter as it is not the same as the TDP. The target isn’t published, but NVIDIA told AnandTech it is 170W for the GeForce GTX 680, below the TDP of 195W. GPU Boost 1.0 allows an increase of up to 32% over the standard Power Target when overclocking the GTX 680. That allows the GPU to boost to higher frequencies even in the most strenuous workloads.
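To put numbers on that, here is a small worked example of the power target arithmetic. The function name and the percentage convention (100 means the default target) are assumptions for this sketch; the 170W, 195W, and 32% figures are the ones quoted above.

```python
POWER_TARGET_W = 170   # default power target, per NVIDIA via AnandTech
TDP_W = 195            # GTX 680 TDP
MAX_INCREASE_PCT = 32  # maximum increase allowed by GPU Boost 1.0


def power_limit(target_w: float, percent: float) -> float:
    """Power limit for a given power-target percentage (100 = default)."""
    return target_w * percent / 100


max_limit_w = power_limit(POWER_TARGET_W, 100 + MAX_INCREASE_PCT)
print(f"{max_limit_w:.1f} W")  # prints: 224.4 W
```

So a fully raised power target lands at roughly 224W, about 29W above the card’s TDP.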

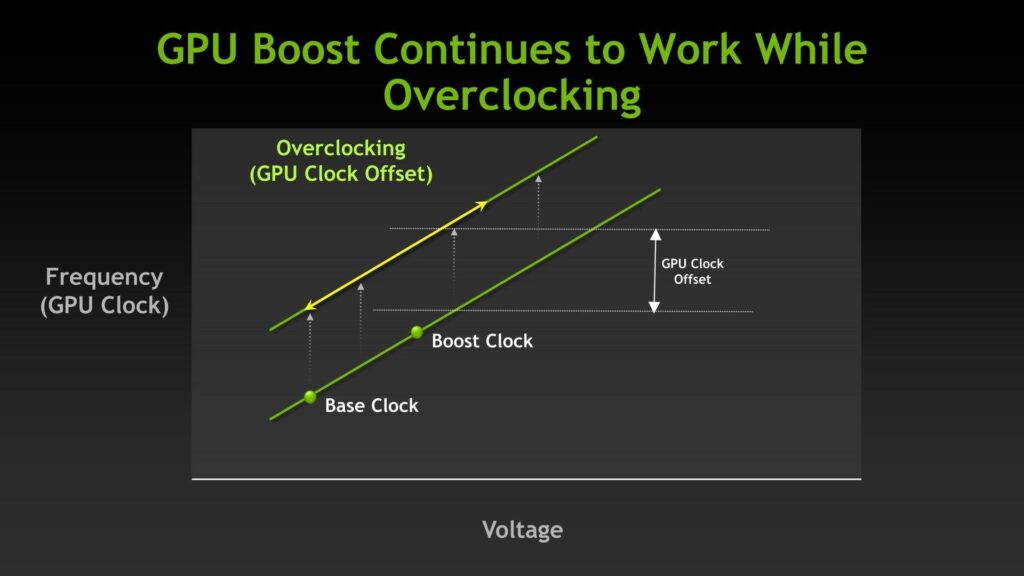

The GPU Base Clock Offset offsets the voltage-frequency curve by a certain amount. If your base frequency is 1000 MHz and your peak frequency is 1100 MHz, with a 200 MHz offset, your new base and peak frequency become 1200 MHz and 1300 MHz. Do note it only changes the frequency of a given V/F Point and not the voltage associated with that V/F Point.
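A minimal sketch of that offset behavior, using the example figures from the paragraph above. The curve representation and the voltages are hypothetical; only the 1000 MHz, 1100 MHz, and 200 MHz numbers come from the text.

```python
from typing import List, Tuple

VFPoint = Tuple[float, int]  # (voltage in mV, frequency in MHz)


def apply_clock_offset(curve: List[VFPoint], offset_mhz: int) -> List[VFPoint]:
    """Shift every frequency by offset_mhz; the voltages stay untouched."""
    return [(mv, mhz + offset_mhz) for mv, mhz in curve]


# Voltages are placeholders; frequencies follow the example in the text.
stock_curve = [(1000.0, 1000), (1050.0, 1050), (1100.0, 1100)]
overclocked = apply_clock_offset(stock_curve, 200)

print(overclocked[0], overclocked[-1])  # prints: (1000.0, 1200) (1100.0, 1300)
```

Every point keeps its voltage and only gains frequency, which is why a too-large offset becomes unstable: each point is asked to run faster at the same voltage.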

The Memory Clock Offset simply adds an offset to the default memory frequency, similar to how GPU Base Clock Offset works.

If anyone is wondering: voltage control is indeed missing from GPU Boost 1.0. NVIDIA still allows for a dynamic voltage that changes depending on the load; however, the maximum voltage is capped to a Vrel or reliability voltage. That value is determined by NVIDIA and reflects the highest voltage the GPU can safely run at without any impact on lifespan.

GPU Boost 2.0 – Kepler, 2013

On February 19, 2013, NVIDIA introduced the GeForce GTX Titan as part of the GeForce GTX 700 series product line. The GTX Titan sports the full big Kepler GPU and is a significant step up compared to the GeForce GTX 680 in both price and performance. With GeForce GTX Titan, NVIDIA also introduced the GPU Boost 2.0 technology.

GPU Boost 2.0 is a small but significant improvement over the GPU Boost 1.0 technology as it shifts from a predominantly power-based boost algorithm to a predominantly temperature-based boost algorithm. Including temperature in the GPU Boost algorithm makes a lot of sense from performance exploitation and semiconductor reliability perspectives.

First, with GPU Boost 1.0, NVIDIA made conservative assumptions about temperatures. Consequently, the allowed boost voltages were based on worst-case temperature assumptions. So, as you’d get worst-case boost voltages and associated operating frequency, you’d also get worst-case performance.

Second, semiconductor reliability is strongly correlated with operating temperature and operating voltage. For a given frequency, you need a higher operating voltage to maintain reliability at a higher temperature. Alternatively, at a lower operating temperature, you can use a lower voltage and retain reliability for a given frequency.

With GPU Boost 2.0, NVIDIA better maps the relationship between voltage, temperature, and reliability. The algorithm aims to prevent a situation where high voltage is used in combination with a high operating temperature, resulting in lower reliability. That enables NVIDIA to allow for higher operating voltages both at stock and in the form of over-voltage options for enthusiasts.

The result is, according to NVIDIA, that GPU Boost 2.0 offers two layers of additional performance improvement over GPU Boost 1.0.

- First, a higher default operating voltage (Vrel) enables higher out-of-the-box frequencies at lower temperatures.

- Second, NVIDIA allows its ecosystem partners to extend the voltage range (Vmax) if the end-user is willing to trade in reliability.

While it may seem that the over-voltage is a welcome return to manual overclocking, it is essential to note that NVIDIA still determines the voltage range between Vrel and Vmax. Board partners have the option to allow end-users to access this additional range but cannot customize either voltage limit.

To summarize, GPU Boost 2.0 offers the following overclocking parameters:

- Power target

- Temperature target

- Overvoltage

- GPU Clock Offset

- Memory Clock Offset

- Display Clock

The Power Target is simplified from GPU Boost 1.0 as there is now only one power parameter: TDP.

The temperature target is introduced with GPU Boost 2.0 and can either be linked to the power target or set independently. The default temperature target is different from the maximum temperature or TjMax, though users can change it. The temperature target determines the boost algorithm and the fan curve. A higher temperature target means you can boost to higher frequencies with lower fan speed.

Overvoltage enables or disables the predefined voltage range between Vrel and Vmax.

The GPU Clock and Memory Clock Offset are the same as GPU Boost 1.0.

Oh, right, and GPU Boost 2.0 also offers the ability to overclock the Display Clock, which overclocks your monitor by forcing it to run at a higher refresh rate.

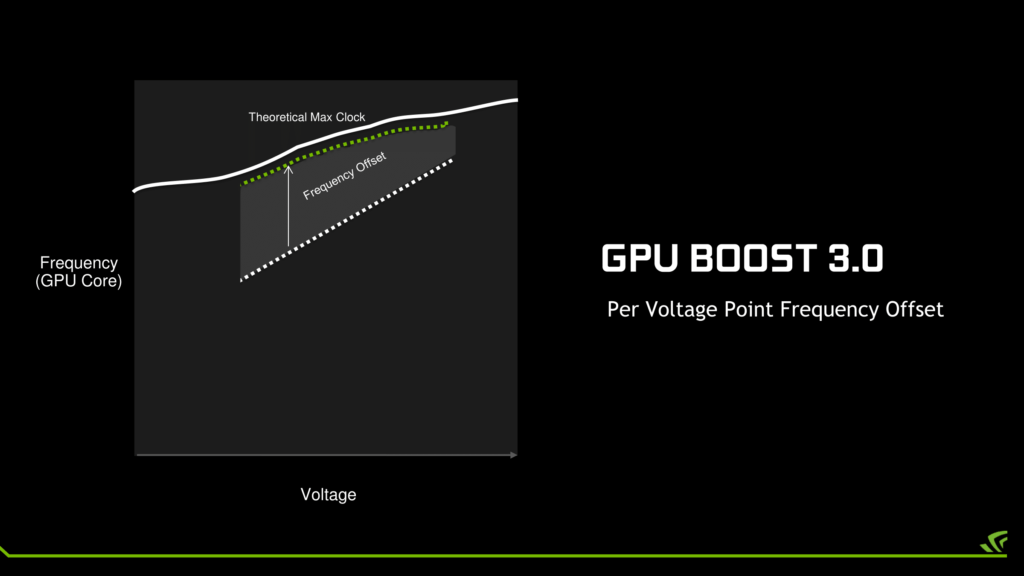

GPU Boost 3.0 – Pascal, 2016

With the release of the Pascal graphics cards on July 20, 2016, NVIDIA introduced GPU Boost 3.0. While advertised as a significant improvement over the previous Boost technology, it does not look much different on the surface. GPU Boost 3.0 introduces three new features:

- Per Voltage Point Frequency Offset

- Voltage Point Lock via NVAPI

- Overvolting expressed as a percentage

The main feature of GPU Boost 3.0 is that NVIDIA exposes the individual points of the GPU voltage-frequency curve to the end-user. That means enthusiasts can fine-tune every single point on the voltage-frequency curve. With a single fixed offset across the entire curve, the weakest point dictates the offset, and the overclocking headroom at every other point goes unused; per-point tuning avoids that lost opportunity. In addition, it also opens up the chance to undervolt the GPU at specific points of the V/F curve.
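The difference between one fixed offset and per-point offsets can be sketched as follows. The headroom values are hypothetical and the function names are made up for this illustration; real cards expose many more points.

```python
from typing import List


def fixed_offset(headroom_mhz: List[int]) -> int:
    """A single offset must be stable at every point, so the weakest point wins."""
    return min(headroom_mhz)


def unused_headroom(headroom_mhz: List[int]) -> List[int]:
    """Headroom left on the table at each point when using one fixed offset."""
    offset = fixed_offset(headroom_mhz)
    return [h - offset for h in headroom_mhz]


# Hypothetical stable headroom (MHz) per V/F point, lowest voltage first.
stable_headroom_mhz = [180, 160, 140, 120, 100]

print(fixed_offset(stable_headroom_mhz))     # prints: 100
print(unused_headroom(stable_headroom_mhz))  # prints: [80, 60, 40, 20, 0]
```

With per-point tuning, each point can instead run at its own stable headroom, so none of that margin is wasted.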

The obvious downside is that there are a lot of points on the voltage-frequency curve. On the low-end GeForce GT 1030, there are no fewer than eighty (80!) individually adjustable points. To assist with this process, NVIDIA also introduced a setting via its NVAPI to lock the GPU at a specific voltage point. So, any overclocking tool could allow you to test each point individually.

The last difference between GPU Boost 2.0 and GPU Boost 3.0 is that the overvoltage option is now represented as a percentage instead of the actual voltage setting. Overvolting is expressed on a percentage scale from 0% to 100%, obfuscating the higher voltage points. That hasn’t changed the underlying behavior of overvolting, meaning it still only provides access to one or more voltage points pre-calibrated by NVIDIA.

GPU Boost 4.0 – Turing, 2018

With the release of the Turing graphics cards on September 20, 2018, NVIDIA also introduced GPU Boost 4.0. The fourth version of the automatic GPU clock boosting algorithm introduced a couple of new features:

- No hard temperature limit

- User editable temperature threshold points

- NVIDIA OC Scanner

On GPU Boost 3.0, if the GPU temperature exceeded a predefined maximum temperature, the GPU would immediately drop from boost clock to base clock. At the base clock, the fan would ramp up to reduce the temperature. Once the temperature dropped below the hard threshold again, the boost clock would become active again.

On GPU Boost 4.0, there’s no more hard temperature limit but rather a frequency plateau between the uncapped boost and base clock when the temperature exceeds the threshold. Two temperature points define the plateau:

- Point 1: boost clock + temperature/power, where the user prefers the fans to ramp

- Point 2: base clock + temperature/power, where the user prefers the frequency to drop

These two points are user-configurable via the Boost API.

The NVIDIA OC Scanner feature has two parts: the test program and the test workload.

The test program analyzes the factory-fused voltage-frequency curve and determines which points it should test to check for stability quickly. It can lock in a specific point – something already present on GPU Boost 3.0 – and run a workload at that particular point.

NVIDIA explained they go after five specific points on the V/F curve to tune, then interpolate between those points to generate the final V/F curve.
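The "test a few points, then interpolate" idea can be sketched like this. NVIDIA does not say which interpolation method it uses, so plain linear interpolation is an assumption here, as are the function name, the voltages, and the offsets in the example.

```python
from bisect import bisect_right
from typing import Dict, List


def interpolate_offsets(tested: Dict[float, float], voltages_mv: List[float]) -> List[float]:
    """Linearly interpolate frequency offsets (MHz) for every voltage point.

    tested maps a voltage (mV) to the stable offset found at that point.
    Voltages outside the tested range are clamped to the nearest tested point.
    """
    xs = sorted(tested)
    result = []
    for v in voltages_mv:
        if v <= xs[0]:
            result.append(tested[xs[0]])
        elif v >= xs[-1]:
            result.append(tested[xs[-1]])
        else:
            hi = bisect_right(xs, v)  # first tested voltage above v
            x0, x1 = xs[hi - 1], xs[hi]
            y0, y1 = tested[x0], tested[x1]
            result.append(y0 + (y1 - y0) * (v - x0) / (x1 - x0))
    return result


# Five hypothetical tested points: voltage (mV) -> stable offset (MHz).
tested_points = {800.0: 150, 875.0: 140, 950.0: 130, 1025.0: 110, 1100.0: 90}
offsets = interpolate_offsets(tested_points, [800.0, 837.5, 875.0, 987.5, 1100.0])
print(offsets)  # prints: [150, 145.0, 140.0, 120.0, 90]
```

Testing only five points keeps the scan short, at the cost of trusting that the points in between behave like their neighbors.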

The test workload is a mathematical test designed by NVIDIA to test the stability accurately. According to NVIDIA, what’s unique about their test is that it doesn’t make the GPU crash while stress-testing, whereas third-party tools routinely would.

According to NVIDIA’s Tom Petersen, the OC Scanner would deliver a high-quality, automatically adjusted voltage-frequency curve in roughly 20 minutes.

OC Strategy #1: NVIDIA OC Scanner

In our first overclocking strategy, we use the NVIDIA OC Scanner software to overclock the GeForce GT 1030 graphics card automatically.

NVIDIA OC Scanner

The NVIDIA OC Scanner is part of the GPU Boost 4.0 toolset introduced with the Turing architecture in 2018. OC Scanner helps end-users automatically find the optimal overclocking settings. Using an NVIDIA-designed workload, the OC Scanner focuses on five distinct points on your GPU’s voltage-frequency curve, and stress tests those. After finding the maximum stable frequency for a given voltage at each of the 5 points, the final voltage-frequency curve is interpolated between those points.

While Pascal’s successor Turing introduced GPU Boost 4.0, the ability to set the frequency and voltage of a specific point on the V/F curve and lock the GPU to run at a specific point was already present in Pascal GPUs. So, the OC scanner function was also retroactively made available for Pascal GPUs.

To run the OC Scanner, you can use any third-party tool that supports the function. In our case, we use the ASUS GPU Tweak III software.

Upon opening the ASUS GPU Tweak III Tool:

- Click the OC Scanner button

- Click on Advanced Settings

- Set Temperature Target to 97C

- Set Power Target to 100%

- Set Voltage Target to 100%

- Click Save

- Click Start

After the OC Scanner finishes, click Apply to confirm the overclocked settings.

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- Geekbench 5 CUDA: +7.43%

- Geekbench 5 OpenCL: +7.03%

- Geekbench 5 Vulkan: +8.80%

- Furmark 1080P: +2.02%

- FluidMark 1080P: +7.08%

- 3DMark Night Raid: +5.91%

- Unigine Superposition: +7.48%

- Spaceship: +8.02%

- CS:GO FPS Bench: +3.33%

- Final Fantasy XV: +8.86%

- GPUPI 1B CUDA: +8.41%

For an automatic overclocking function, that’s quite a decent free performance improvement, ranging from +2.02% in Furmark 1080P to +8.86% in Final Fantasy XV.

When running Furmark GPU Stress Test, the average GPU clock is 1535 MHz with 0.853 volts, and the GPU Memory clock is 1502 MHz with 1.34 volts. The average GPU temperature is 49.0 degrees Celsius, and the average GPU Hot Spot Temperature is 57.0 degrees Celsius. The average GPU power is 29.289 watts.

When running the GPU-Z Render Test, the maximum GPU Clock is 1924 MHz with 1.063V.

OC Strategy #2: NVIDIA Inspector

In our second overclocking strategy, we use the NVIDIA Inspector software to overclock the GeForce GT 1030 graphics card manually.

NVIDIA Inspector

NVIDIA Inspector is a lightweight NVIDIA-only overclocking application developed by Orbmu2k. It relies entirely on the NVIDIA driver API and is my go-to tool for quick initial overclocking testing.

The Inspector tool allows us to change parameters such as:

- Base Clock Offset

- Memory Clock Offset

- Power Target

- Temperature Target

- Voltage offset

The overclocking process is relatively simple:

- First, increase the power and temperature target

- Then, increase the base clock and memory clock offsets in increments of 25 or 50 MHz

- Then, run a workload of choice to check the stability.
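The manual process above boils down to a simple search loop. The sketch below is a generic illustration, not something NVIDIA Inspector provides: `find_max_stable_offset` and `fake_stress_test` are hypothetical names, and the stand-in test simply pretends that anything above +260 MHz fails.

```python
from typing import Callable


def find_max_stable_offset(is_stable: Callable[[int], bool],
                           step_mhz: int = 25,
                           limit_mhz: int = 1000) -> int:
    """Raise the offset step by step and return the last one that passed."""
    best = 0
    offset = step_mhz
    while offset <= limit_mhz and is_stable(offset):
        best = offset
        offset += step_mhz
    return best


def fake_stress_test(offset_mhz: int) -> bool:
    """Stand-in for a real stress test run; not a real stability check."""
    return offset_mhz <= 260


print(find_max_stable_offset(fake_stress_test))               # prints: 250
print(find_max_stable_offset(fake_stress_test, step_mhz=10))  # prints: 260
```

Coarse steps get close to the limit quickly; a second pass with smaller steps then pins down the last few MHz. In practice, the stability check is a manual benchmark run rather than a function call.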

In my case, I prefer to run the FurMark workload as a worst-case scenario stress test. However, it does not catch all instabilities, especially at the high end of the voltage-frequency curve. So, I also ensure that the settings can pass each of the benchmarks I use in this guide.

I managed to push the Base Clock Offset by 260 MHz, increasing the maximum boost frequency to 2012 MHz in ultra-light workloads. Unfortunately, our graphics card memory overclocks so well that we run out of memory clock offset slider. I set a +1000 MHz offset and increased the memory frequency to 2002 MHz.

Before we move on to the performance results, I want to highlight two crucial aspects of GeForce GT 1030 overclocking: the impact of memory overclocking on performance and GPU frequency.

Memory Overclocking & Benchmark Performance

The performance improvements from overclocking both the GPU and Memory will be evident in a couple of minutes, but it’s essential to understand what drives the performance gains. If we isolate the performance improvement in, for example, FurMark, we find that the performance scaling when GPU overclocking is very poor compared to when overclocking the memory.

- A 10% increase in GPU frequency yields a performance improvement of about 2%. In contrast, an increase of 10% in memory frequency delivers a performance improvement of 5.6%.

- The GPU performance scaling with overclocking does improve when we already have the memory overclocked.

- After increasing the memory frequency by 33% or 500 MHz, a 10% increase in GPU frequency now yields a performance improvement of 5.4%. That’s more than double the 2% we get when the memory is at the default frequency.

The critical takeaway is that for the GeForce GT 1030, the memory frequency is a much more important driver of performance. In addition, the memory overclocking headroom is also much larger than the GPU overclocking headroom. Combining the higher overclocking headroom and better performance scaling makes it evident that memory should be the primary focus when overclocking the GeForce GT 1030.
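One way to compare these numbers is to express them as a scaling ratio: performance gain divided by frequency gain. The helper name is made up for this sketch; the percentages are the FurMark figures quoted above.

```python
def scaling_efficiency(freq_gain_pct: float, perf_gain_pct: float) -> float:
    """Performance gained per percent of frequency gained (1.0 = perfect scaling)."""
    return perf_gain_pct / freq_gain_pct


gpu_at_stock_memory = scaling_efficiency(10, 2.0)
memory = scaling_efficiency(10, 5.6)
gpu_at_overclocked_memory = scaling_efficiency(10, 5.4)

print(f"GPU, stock memory:       {gpu_at_stock_memory:.2f}")        # 0.20
print(f"Memory:                  {memory:.2f}")                     # 0.56
print(f"GPU, overclocked memory: {gpu_at_overclocked_memory:.2f}")  # 0.54
```

A ratio of 0.20 means the GPU is mostly waiting on memory at stock settings; once the memory is overclocked, GPU frequency scales almost as well as memory frequency does.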

Memory Overclocking & FurMark Frequency

While overclocking the memory offers many benefits in unlocking the performance potential of the GeForce GT 1030, there’s also a significant drawback. In FurMark specifically, but likely in all heavy workloads, increasing the memory frequency also lowers the GPU frequency.

- When we keep the GPU frequency at default, with a standard memory frequency of 1500 MHz, the GPU runs at 1442 MHz in Furmark. However, when increasing the memory frequency to 2100 MHz, the GPU frequency is only 1316 MHz. That’s a reduction of 126 MHz.

- The frequency reduction is more apparent when we overclock the GPU with a base clock offset. When we increase the GPU base clock offset by 250 MHz and maintain the standard memory frequency, the GPU runs at 1620 MHz in FurMark. However, when we again increase the memory frequency to 2100 MHz, the GPU runs at only 1430 MHz in FurMark. That’s a reduction of 190 MHz.

- Furthermore, the GPU frequency continues to reduce when we increase the memory frequency. At our maximum memory frequency of 2300 MHz, the FurMark GPU frequency is only 1404 MHz.
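The first two reductions above can also be expressed as a percentage of the original GPU frequency. The helper below is a hypothetical name for this sketch; the clock values are the FurMark figures from the list.

```python
from typing import Tuple


def clock_drop(before_mhz: int, after_mhz: int) -> Tuple[int, float]:
    """Return the drop in MHz and as a percentage of the original clock."""
    drop = before_mhz - after_mhz
    return drop, drop / before_mhz * 100


# Memory raised from 1500 MHz to 2100 MHz in both cases.
stock_drop_mhz, stock_drop_pct = clock_drop(1442, 1316)    # default GPU clocks
offset_drop_mhz, offset_drop_pct = clock_drop(1620, 1430)  # +250 MHz GPU offset

print(f"{stock_drop_mhz} MHz ({stock_drop_pct:.1f}%)")    # prints: 126 MHz (8.7%)
print(f"{offset_drop_mhz} MHz ({offset_drop_pct:.1f}%)")  # prints: 190 MHz (11.7%)
```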

I couldn’t isolate why this is happening, but one hypothesis is that the increased memory frequency also increases the load on the GPU’s memory controller. As a result, the GPU Boost algorithm limiters would trigger earlier, causing an earlier decrease of allowed voltage and associated operating frequency.

I will get back to this topic in OC Strategy #4, so let’s just move on to showing how to configure NVIDIA Inspector and the benchmark results.

Upon opening the NVIDIA Inspector Tool:

- Click the Show Overclocking button and press Yes

- Select Performance Level P0

- Set Base Clock Offset to +260 MHz

- Set Memory Clock Offset to +1000 MHz

- Set Power Target to 100%

- Select Prioritize Temperature

- Set Temperature Target to 97 degrees Celsius

Then click Apply Clocks & Voltage to confirm the overclocked settings.

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- Geekbench 5 CUDA: +15.49%

- Geekbench 5 OpenCL: +15.74%

- Geekbench 5 Vulkan: +18.82%

- Furmark 1080P: +18.45%

- FluidMark 1080P: +11.48%

- 3DMark Night Raid: +15.81%

- Unigine Superposition: +18.00%

- Spaceship: +14.15%

- CS:GO FPS Bench: +17.80%

- Final Fantasy XV: +15.82%

- GPUPI 1B CUDA: +11.68%

The performance improvement is visible across the board, with a peak improvement of +18.82% in Geekbench 5 Vulkan.

When running Furmark GPU Stress Test, the average GPU clock is 1421 MHz with 0.785 volts, and the GPU Memory clock is 2002 MHz with 1.34V. The average GPU temperature is 46.0 degrees Celsius, and the average GPU Hot Spot Temperature is 54.0 degrees Celsius. The average GPU power is 28.375 watts.

When running the GPU-Z Render Test, the maximum GPU Clock is 2012 MHz with 1.075V.

OC Strategy #3: ASUS GPU Tweak III

In our third overclocking strategy, we use the ASUS GPU Tweak III software to overclock the GeForce GT 1030 graphics card further.

ASUS GPU Tweak III

ASUS GPU Tweak III enables control over 3D graphics performance and monitoring. The third installment of the GPU Tweak software, available since January 2021, was built from the ground up. It features a fresh user interface design, automatic and manual overclocking features, fan control, profile connect, hardware monitoring, on-screen display, and a couple of integrated software tools like GPU-Z and FurMark.

Most relevant for our overclocking journey is access to the GPU Boost knobs and dials, including Power Target, GPU Overvoltage, GPU Temperature Target, the OC Scanner, and VF Curve Tuner.

What makes GPU Tweak III so valuable, however, is that it provides an option to extend the overclocking range of the GPU and memory frequency beyond the standard NVIDIA limitations.

Whereas NVIDIA Inspector limits us to +1000 offset on the memory, with GPU Tweak III, we can increase this to +1602 offset. As the GT 1030 is extremely sensitive to memory frequency in terms of performance, this additional headroom helps a lot, especially since the memory could be further overclocked from 2000 MHz to 2150 MHz.

Upon opening the ASUS GPU Tweak III tool:

- Open the settings menu

- Select Enhance overclocking range

- Click Save

- Leave the settings menu

- Set Power Target to 100%

- Set GPU Voltage to 100%

- Set GPU Boost Clock to 1766 (+260)

- Set Memory Clock to 8600 (+2592)

- Set GPU Temp Target to 97C

Then click Apply to confirm the overclocked settings.
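The clock values in the steps above use a different scale than the monitoring figures elsewhere in this guide. The sketch below shows how they appear to relate, assuming GPU Tweak III displays the GDDR5 effective data rate, which is four times the memory clock that monitoring tools report. That factor is inferred from the numbers in this guide rather than stated by ASUS, and the function name is made up for illustration.

```python
GDDR5_RATE_MULTIPLIER = 4  # effective data rate = 4 x reported memory clock (inferred)

STOCK_MEMORY_CLOCK_MHZ = 1502  # reported memory clock at stock
STOCK_BOOST_CLOCK_MHZ = 1506   # listed boost clock of the ASUS card


def reported_memory_clock(effective_mhz: float) -> float:
    """Convert an effective GDDR5 data rate back to the reported memory clock."""
    return effective_mhz / GDDR5_RATE_MULTIPLIER


stock_effective = STOCK_MEMORY_CLOCK_MHZ * GDDR5_RATE_MULTIPLIER  # 6008
target_effective = stock_effective + 2592                         # 8600
target_boost = STOCK_BOOST_CLOCK_MHZ + 260                        # 1766

print(target_effective, reported_memory_clock(target_effective), target_boost)
# prints: 8600 2150.0 1766
```

Under that assumption, the 8600 setting corresponds to the roughly 2150 MHz memory clock we see during testing.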

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- Geekbench 5 CUDA: +20.17%

- Geekbench 5 OpenCL: +18.73%

- Geekbench 5 Vulkan: +21.14%

- Furmark 1080P: +21.97%

- FluidMark 1080P: +11.12%

- 3DMark Night Raid: +20.87%

- Unigine Superposition: +23.11%

- Spaceship: +18.87%

- CS:GO FPS Bench: +27.29%

- Final Fantasy XV: +25.74%

- GPUPI 1B CUDA: +11.95%

Further unleashing the memory frequency has a significant impact on performance, especially considering we’re still using the stock air cooler. We see a peak performance increase of +27.29% in CS:GO FPS Bench.

When running Furmark GPU Stress Test, the average GPU clock is 1418 MHz with 0.782 volts, and the GPU Memory clock is 2151 MHz with 1.34 volts. The average GPU temperature is 46.6 degrees Celsius, and the average GPU Hot Spot Temperature is 54.4 degrees Celsius. The average GPU power is 28.190 watts.

When running the GPU-Z Render Test, the maximum GPU Clock is 2037.5 MHz with 1.113V.

OC Strategy #4: ElmorLabs EVC2 & Hardware Modifications

In our fourth overclocking strategy, we call upon the help of Elmor and his ElmorLabs EVC2SX device. We resort to hardware modifications to work around the power and voltage limitations of the GT 1030 graphics card.

I will elaborate on two distinct topics:

- Why do we need and use hardware modifications?

- How do we use the ElmorLabs EVC2SX for the hardware modifications?

If we return to the results from our previous overclocking strategy, we find that two elements are limiting the overclocking potential of the GT 1030 GPU: power and voltage. Let’s look at them a little closer.

Situation Analysis: Power Limitation

As we know from the GPU Boost 3.0 technology, power consumption is one of the main limiters of the boosting algorithm, as the algorithm reduces the operating voltage if the power consumption exceeds the TDP threshold.

On modern high-end NVIDIA graphics cards, a separate IC reports the 12V input power to the GPU. It does this by measuring the input voltage and voltage drop over a shunt resistor. The GPU Boost 3.0 algorithm may restrict the GPU from boosting to higher frequencies based on this power reporting.

One way of working around this limitation is by so-called shunt-modding. By shunt-modding, we decrease the resistance of the circuit reporting the power consumption to the GPU, effectively forcing it to under-report. Since the GPU is unaware the power consumption is under-reported, it may boost to higher voltages and frequencies.
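To make the under-reporting concrete, here is a rough sketch of the arithmetic behind a shunt mod. It assumes the monitoring IC converts the sensed voltage drop to current using the original shunt value, so the reported power scales with the ratio of real to assumed resistance. The 5 mOhm shunt and the 100 W load are hypothetical values, not taken from any specific card.

```python
def parallel(r1: float, r2: float) -> float:
    """Resistance of two resistors in parallel."""
    return (r1 * r2) / (r1 + r2)


def reported_power(actual_power_w: float, assumed_shunt_mohm: float,
                   real_shunt_mohm: float) -> float:
    """Power the monitoring IC reports when it still assumes the stock shunt.

    The sensed voltage drop scales with the real resistance, but the IC
    converts it back to current using the assumed (stock) resistance.
    """
    return actual_power_w * real_shunt_mohm / assumed_shunt_mohm


stock_shunt = 5.0                            # hypothetical stock shunt, in mOhm
modded_shunt = parallel(stock_shunt, 5.0)    # same value soldered on top: 2.5 mOhm

print(reported_power(100.0, stock_shunt, modded_shunt))  # 50.0
```

In this sketch, halving the shunt resistance makes a 100 W load look like 50 W to the boost algorithm.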

A second way to work around the power limitation would be to adjust the power limits in the GPU VBIOS. In the past, enthusiasts would be able to reverse engineer the BIOS files, modify the power limits, then flash the graphics card with a new VBIOS. Unfortunately, since the Maxwell generation of NVIDIA graphics cards, it’s been nearly impossible for enthusiasts to flash a custom VBIOS to their graphics card. So, unless an AIB partner provides you with a modified VBIOS, this is no longer an option.

A third way to work around this is by having a highly efficient VRM. An efficient VRM will require less input power for a given GPU power and thus allow for slightly more GPU power within the restricted input power.

Note that on high-end AMD graphics cards, the VRM reports the output current directly to the GPU, and thus there is no opportunity for shunt-modding.

On low-end graphics cards like the GeForce GT 1030, the VRM design is cheaper and less feature-rich. Crucial is that no separate IC reports the input power to the GPU. So how does the GT 1030 evaluate the current power consumption used in the Boost algorithm?

We don’t know for sure, but one theory is that the GPU has an internal mechanism to estimate power consumption. The process is not that complicated if we know the following equation:

Power = Voltage x Current

- The GPU can estimate the voltage based on its voltage-frequency curve as it knows which voltage it’s requesting from the voltage controller.

- The GPU can also estimate the current based on GPU load, GPU frequency, memory frequency, and memory controller load. Essentially, it can evaluate how many transistors are active at a given time.

Together, this will give an estimate of power consumption.

Now that we have a better understanding of why the power limitation is relevant and how the GPU evaluates the current power consumption, we can start figuring out how to work around this issue:

- Modifying the VBIOS and overriding the power limits is not possible due to strict limitations from NVIDIA

- Manipulating the current estimate is not possible as the GPU or GPU driver takes care of that

- Manipulating the voltage IS a possibility: the GPU uses the requested VID in the power evaluation, not the actual voltage, because the voltage controller does not report the actual voltage to the GPU

With our hardware modification, we’ll try to manually increase the voltage at a very low point on the V/F Curve. The GPU will think we’re running at a low voltage because it’s requesting a low voltage from the VRM controller, but the actual voltage output will be much higher to support a higher overclock.
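The idea can be sketched in a few lines, under the theory above that the GPU estimates power from the requested VID and an internally estimated current. The 30 W limit is the board power quoted for this card; the 35 A current estimate and the function names are hypothetical and only serve the illustration.

```python
POWER_LIMIT_W = 30.0  # board power limit quoted for the GT 1030


def estimated_power(voltage_v: float, estimated_current_a: float) -> float:
    """Power estimate as the GPU might compute it: voltage times current."""
    return voltage_v * estimated_current_a


def limiter_trips(voltage_v: float, estimated_current_a: float) -> bool:
    """True if the estimate exceeds the power limit."""
    return estimated_power(voltage_v, estimated_current_a) > POWER_LIMIT_W


current_estimate = 35.0  # hypothetical current estimate, in amps

# What the GPU sees: it only knows the VID it requested.
print(limiter_trips(0.7, current_estimate))  # False

# What it would see if the real 1.2 V output were reported back.
print(limiter_trips(1.2, current_estimate))  # True
```

Because the voltage controller never reports the real output, only the first case applies and the power limiter stays quiet.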

Situation Analysis: Voltage Limitation

There’s also a voltage limitation with GPU Boost 3.0. NVIDIA calls this voltage the Vrel or reliability voltage. NVIDIA considers it the maximum voltage that is safe to use for an extended time (within the warranty term).

Since GPU Boost 2.0, NVIDIA also provides their board partners with the ability to allow overvolting. Overvolting, in this case, just means enabling an extended voltage range between Vrel, the reliability voltage, and Vmax, the maximum voltage. Overvolting beyond the Vmax is not allowed.

We can see how this works by looking back at our previous overclocking strategies.

- In OC Strategy #2, we didn’t enable overvolting. So, the highest reported voltage is Vrel, which in our case is 1.075V

- In OC Strategy #3, we did enable overvolting. So, the highest reported voltage is Vmax, which in our case is 1.113V

- However, when we look at the factory-fused voltage-frequency curve of our GT 1030, we see that the available voltage goes all the way up to 1.24375V

If we want to use a voltage higher than the Vmax of 1.113V, then hardware modifications are the only way.

As I explained in the previous segment, the voltage controller on the low-end graphics cards is usually very feature-poor. In our case, the voltage controller does not report the actual voltage to the GPU. So, we are free to increase the voltage beyond the Vmax with hardware modifications.

Hardware Modifications

Now that we understand the need for and purpose of the hardware modifications, let’s get started. For this graphics card, I will do two hardware modifications:

- The first modification is for the GPU voltage controller, and this will help address the power and voltage limitations we discussed before

- The second modification is for the memory voltage controller, which hopefully will provide us with additional overclocking headroom for the memory.

First up is the GPU voltage controller.

GPU Voltage Controller – Identification

We identified the uPI Semiconductor uP9024Q as the GPU voltage controller.

Unfortunately, we can’t find the datasheet of the voltage controller with a quick Google Search. However, there are other ways. Sometimes vendors like uPI would re-purpose existing designs with minor modifications or improvements. Since we are not looking for the exact specification but only need the pinout, we can try going to the uPI website and looking for an equivalent part.

Under DC/DC Buck Controller > Multiple Phase Buck Controller we can apply the following filter: Package: WQFN3x3-20 (our chip has 20 pins, and this is the only listed option with 20 pins)

That leaves us with two options: the more advanced uP9529Q and the less advanced uP1666Q. We can now check if either solution matches the chip on our graphics card. Checking this requires a little bit of educated guesswork.

We can open the datasheets of the two controllers and see if the pinout differs. For example, we find that the feedback return (FBRTN) pin location is different. Feedback return should connect directly to ground, and we can quickly check if that’s the case.

Checking eliminates uP9529Q as pin 7 connects to ground on our controller, whereas on the uP9529Q, pin 7 provides the reference voltage input.

That leaves uP1666Q. We can do additional checks to further confirm our suspicion that our chip is similar to this controller. For example, we can check if PHASE1 (pin 20) or PHASE2 (pin 16) connects to the nearby inductor. Additionally, we can verify if PVCC (pin 18) connects to a nearby larger capacitor.

Although there’s no way to know if we have found the correct part, based on these checks, we can take a calculated risk and use the information from the datasheet to do the hardware modification.
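The elimination process above can be written down as a small consistency check. This is only a sketch: the pin numbers are the ones quoted in this guide, the uP1666Q pin 7 entry is implied by the text rather than stated, and the probed nets for pins 18 and 20 are hypothetical measurements added for illustration.

```python
# Pin functions quoted in this guide. The uP1666Q pin 7 entry is implied by the
# text rather than stated, so confirm it against the datasheet.
CANDIDATE_PINOUTS = {
    "uP9529Q": {7: "REFIN"},
    "uP1666Q": {7: "FBRTN", 16: "PHASE2", 18: "PVCC", 20: "PHASE1"},
}

# What each pin function should be wired to on the board.
EXPECTED_NET = {
    "FBRTN": "ground",
    "REFIN": "reference network",
    "PHASE1": "inductor",
    "PHASE2": "inductor",
    "PVCC": "capacitor",
}


def matching_candidates(observed: dict) -> list:
    """Return the candidates whose known pins all agree with the probed nets."""
    matches = []
    for name, pinout in CANDIDATE_PINOUTS.items():
        consistent = all(
            EXPECTED_NET[pinout[pin]] == net
            for pin, net in observed.items()
            if pin in pinout  # pins without datasheet info cannot be judged
        )
        if consistent:
            matches.append(name)
    return matches


# Pin 7 to ground is the check reported in this guide; pins 18 and 20 are
# hypothetical multimeter results.
observed = {7: "ground", 18: "capacitor", 20: "inductor"}
print(matching_candidates(observed))  # ['uP1666Q']
```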

GPU Voltage Controller – Modification

As outlined in the Situation Analysis segment, our goal is to increase the voltage output manually.

In the datasheet, we find a Typical Application Circuit where we can trace back Vout to UGATE1 and LGATE1.

The Upper Gate, UGATE, and Lower Gate, LGATE, connect to the high-side and low-side MOSFETs. To keep it simple, a MOSFET is a transistor that switches on and off under control of its GATE signal. In this case, the high-side connects to 12V, and the low-side is at 0V as it’s connected to ground. The MOSFETs output this 12V and 0V through an output filter, which averages out these voltages to provide a smooth output voltage.

For example, the MOSFET switches on the high-side (12V) 10% of the time and the low-side (0V) 90% of the time. The average voltage output of the MOSFET is then 12V x 10% + 0V x 90% = 1.2V.
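The averaging in this example is easy to reproduce. The sketch below treats the output filter as ideal and ignores losses; the function names are illustrative.

```python
def average_output(v_high: float, duty_high: float, v_low: float = 0.0) -> float:
    """Average voltage after an ideal output filter.

    duty_high is the fraction of time the high-side is switched on.
    """
    return v_high * duty_high + v_low * (1.0 - duty_high)


def duty_for(v_out: float, v_high: float) -> float:
    """High-side duty cycle needed for a given output, with the low-side at 0 V."""
    return v_out / v_high


print(f"{average_output(12.0, 0.10):.2f} V")  # 1.20 V
print(f"{duty_for(0.8, 12.0):.1%}")           # 6.7%
```

The second line shows how short the high-side on-time is at typical GPU voltages: a 0.8V output from a 12V input needs a duty cycle below 7%.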

The voltage controller controls the output voltage by changing how long the upper gate and lower gate signals stay enabled. Keeping the UGATE signal enabled longer tells the high-side MOSFET to stay switched on for a larger share of the time, increasing the output voltage.

In the Functional Block Diagram, we find that the difference between Reference Input voltage (REFIN) and Feedback voltage (FB) drives the Gate Control Logic. Essentially, the voltage controller aims to have reference input equal to the sensed output voltage. If the reference input voltage is higher than the feedback voltage, the voltage controller will try to increase the output voltage. If the reference input voltage is lower than the feedback voltage, then the voltage controller will try to decrease the output voltage.

That offers us two ways to control the output voltage: adjust the REFIN or adjust the FB.
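As a toy illustration of this loop, the sketch below nudges the high-side duty cycle until the feedback voltage matches REFIN. It is a deliberately simplified model, not how the uPI controller is implemented: the gain, step count, and direct sensing of the output are all assumptions.

```python
def settle(refin: float, v_in: float = 12.0, gain: float = 0.04,
           steps: int = 200) -> float:
    """Toy regulation loop: adjust the duty cycle until FB equals REFIN.

    Assumes the feedback pin senses the filtered output directly.
    """
    duty = 0.0
    for _ in range(steps):
        fb = v_in * duty                 # sensed output voltage
        error = refin - fb               # positive: output is too low
        duty = min(1.0, max(0.0, duty + gain * error))
    return v_in * duty


print(f"{settle(0.8):.3f} V")  # 0.800 V
print(f"{settle(1.2):.3f} V")  # 1.200 V
```

Raising REFIN from 0.8V to 1.2V moves the settled output by the same amount, which is why REFIN is a useful handle for the modification.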

In a typical voltage controller, the REFIN is an internal non-adjustable voltage. The voltage output (Vout) connects to the feedback input pin (FB) through two resistors: one between Vout and FB and one between FB and GND. These two resistors serve as a voltage divider. For example, if the reference input voltage is fixed to 0.8V and the feedback resistors divide the output voltage by 2, the output voltage is 1.6V.

If we double the resistance between Vout and FB, the feedback resistors divide the output voltage by 3 instead of 2, so the voltage controller would have a feedback input of 1.6V divided by 3, or about 0.53V. The voltage controller will increase the output voltage since the reference input voltage of 0.8V is higher than the feedback input voltage. In this case, the voltage output will settle at 2.4V.
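The divider arithmetic for this generic example can be checked with a short sketch. The 1 kOhm resistor values are hypothetical; only their ratio matters.

```python
def feedback_voltage(v_out: float, r_top: float, r_bottom: float) -> float:
    """Voltage at FB for a divider with r_top (Vout to FB) and r_bottom (FB to GND)."""
    return v_out * r_bottom / (r_top + r_bottom)


def regulated_output(v_ref: float, r_top: float, r_bottom: float) -> float:
    """Output voltage at which FB equals the reference voltage."""
    return v_ref * (r_top + r_bottom) / r_bottom


V_REF = 0.8

# Equal resistors: the output is divided by 2.
print(f"{regulated_output(V_REF, 1000, 1000):.2f} V")  # 1.60 V

# Doubling the top resistor: FB momentarily drops, then the output rises.
print(f"{feedback_voltage(1.6, 2000, 1000):.2f} V")    # 0.53 V
print(f"{regulated_output(V_REF, 2000, 1000):.2f} V")  # 2.40 V
```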

However, that’s not how it works in this example. As we can see from the Typical Application Circuit, the feedback circuit is used for voltage sense rather than as a voltage divider. So that leaves the REFIN voltage to control the voltage output.

Under Functional Description, we find a more detailed overview of the Voltage Control Loop. The figure shows that VREF, REFADJ, and VID control REFIN. In short: a Boot voltage is set by resistors R2, R3, R4, and R5 from the 2V reference voltage (Vref). This voltage can dynamically be adjusted by a REFADJ output which is determined, in this case, by the VID. The VID is, of course, controlled by our GPU and its GPU Boost algorithm.

So, what are our options to manually adjust the reference input voltage (REFIN)? Let’s check Ohm’s Law:

Voltage = Current x Resistance

- We can replace any of the four aforementioned resistors with a different resistor. Reducing the resistance of R2 or R3 would increase the REFIN, whereas lowering the resistance of R4 or R5 would decrease the REFIN.

- Instead of replacing the resistor, we can add a variable resistor parallel with any of the four mentioned resistors, which will effectively decrease the resistance of that resistor. That is the typical approach for hardware modifications, as we can use a variable resistor to change the voltage on the fly.

- We can inject current at the REFIN point, which increases the voltage to REFIN.

With the ElmorLabs EVC2SX, we go for the third option as we alter the current in the circuit to change the reference input voltage.
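A rough way to reason about current injection is to treat the resistor network at REFIN as a voltage source with an equivalent series resistance: every microamp sourced into the node lifts it by that resistance times the current. The 0.8V starting point and 10 kOhm equivalent resistance below are hypothetical, chosen only so that 40uA and 50uA land near the 1.2V and 1.3V used later in this guide. They are not measured values.

```python
def refin_with_injection(v_open_circuit: float, r_equivalent_ohm: float,
                         i_injected_ua: float) -> float:
    """REFIN voltage after sourcing current into the node.

    v_open_circuit: REFIN before injection (hypothetical value).
    r_equivalent_ohm: equivalent resistance seen at REFIN (hypothetical value).
    i_injected_ua: sourced current in microamps; positive raises REFIN.
    """
    return v_open_circuit + r_equivalent_ohm * i_injected_ua * 1e-6


for microamps in (0, 10, 40, 50):
    volts = refin_with_injection(0.8, 10_000, microamps)
    print(f"{microamps:>2} uA -> {volts:.2f} V")
```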

In addition, we also remove the resistor connecting REFADJ to REFIN so that the GPU VID no longer interferes in the circuit. To achieve stability at higher frequencies, we need a very low VID, around 0.7V, to stay below the power limit, but at the same time, we need an actual voltage higher than Vmax, about 1.2V, to be stable.

In other words, we’d need a voltage offset of around 500mV. If we use this kind of offset, two things will happen:

- The boot voltage may be too high

- There might be sudden voltage spikes when the GPU Boost algorithm finds there’s enough voltage headroom for the highest V/F Point: the 500mV offset would then apply to a 1.1V VID, resulting in 1.6V

By eliminating the VID from the equation, we ensure that there’s one fixed voltage applied.
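The difference between the two approaches comes down to simple arithmetic, sketched here with the voltages from the text. The function names are illustrative.

```python
OFFSET_V = 0.5  # offset needed to lift a 0.7 V VID to about 1.2 V
FIXED_V = 1.2   # single fixed output once the VID is disconnected


def output_with_offset(vid: float) -> float:
    """Output if the VID still drives REFIN and we add a constant offset."""
    return vid + OFFSET_V


def output_with_fixed_reference(vid: float) -> float:
    """Output once the VID no longer influences REFIN: always the same."""
    return FIXED_V


for vid in (0.7, 1.1):
    print(
        f"VID {vid:.1f} V -> offset: {output_with_offset(vid):.1f} V, "
        f"fixed: {output_with_fixed_reference(vid):.1f} V"
    )
```

With the offset approach, a jump to the 1.1V V/F point produces 1.6V; with the fixed reference, the output stays at 1.2V regardless of the VID.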

GPU Voltage Controller – Summary

Let’s summarize everything we talked about so far.

Our goal is to manually control the output voltage of the GPU voltage controller because (a) we want to exceed the Vmax limitation imposed by NVIDIA, and (b) we want to keep the VID as low as possible to avoid triggering the power consumption limiter.

The GPU voltage controller determines the appropriate voltage based on two inputs:

- a reference input voltage (REFIN), and

- a feedback input voltage (FB).

The reference input voltage is based on a boot voltage derived from a 2V reference voltage (VREF) and any dynamic reference adjustment output (REFADJ). The reference adjustment is controlled by the GPU directly by issuing VID requests based on the GPU’s voltage-frequency curve and the GPU Boost algorithm.

The REFIN/FB comparison output serves as input for the gate logic that controls the Upper Gate Driver (UGATE) and Lower Gate Driver (LGATE).

- The UGATE signal controls the high-side MOSFET connected to 12V

- The LGATE signal controls the low-side MOSFET connected to 0V.

The MOSFET is a transistor that switches on and off. The MOSFET output goes through an output filter to provide a stable voltage to the GPU.

If the GPU Boost algorithm finds sufficient headroom to increase the voltage, it increases the VID. This VID request goes via the REFADJ and increases the REFIN. The increased REFIN compared to the unchanged FB will make the voltage controller keep the Upper Gate (UGATE) signal enabled longer. The high-side MOSFET connected to 12V will thus stay enabled for longer, which increases the output filter’s average voltage. That, in turn, increases the output voltage to the GPU.

This process happens a couple hundred thousand times per second.

To achieve our goal, we make the following hardware modifications:

- We remove the resistor between REFIN and REFADJ to prevent the GPU VID from influencing the voltage output

- We add current into the circuit connecting VREF and REFIN. Following Ohm’s law and Kirchhoff’s current law, the increased current and unchanged resistance increase the voltage to REFIN

Memory Voltage Controller

We identified the uPI Semiconductor uP1540P as the memory voltage controller. Fortunately, the datasheet is readily available on the UPI-Semi website: https://www.upi-semi.com/en-article-upi-473-2080.

In the datasheet, we find a Typical Application Circuit and see that we can trace back the voltage output (Vout) to UG and LG/OCS. The Upper Gate Driver Output (UG) and Lower Gate Driver Output (LG) connect to the high-side and low-side MOSFETs. We discussed how that works earlier in this video.

Furthermore, we also see a feedback loop from the voltage output back to the voltage controller via FB.

In the Functional Block Diagram, we find that the Gate Control Logic is driven by comparing Feedback Input Voltage (FB) and Offset Voltage (OFS). The FB pin feeds back the output voltage Vout into the controller. The OFS pin can be used to set a voltage offset by connecting it via a resistor to the 0.8V voltage output REFOUT.

So, what are our options to manually control the memory voltage? Again, let’s check Ohm’s law:

Voltage = Current x Resistance

- We can replace the resistor connecting Vout and FB with a higher value. That will result in a lower voltage reported to the Feedback pin, and as a consequence, the voltage controller will try to increase the voltage output.

- Alternatively, we can replace the resistor connecting FB and GND with a lower value. That will also result in a lower voltage reported to the Feedback pin, and as a consequence, the voltage controller will try to increase the voltage output.

- We can source a current on the OFS pin. As a result, the voltage controller will try to increase the output voltage

- We can place a resistor between OFS and REFOUT to control the offset voltage scale

- We can sink a current on the FB pin. That will reduce the voltage on the Feedback pin, and as a consequence, the voltage controller will try to increase the voltage output

With the ElmorLabs EVC2SX, we go for the last option as we alter the current in the circuit to change the feedback input voltage.
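For a feedback divider, sinking current from the FB node forces extra current through the resistor between Vout and FB, so the controller has to raise Vout to keep FB at its regulation target. A minimal sketch of that relationship follows. The 0.8V target and both resistor values are hypothetical and are not taken from the uP1540P datasheet or from this card.

```python
def output_with_fb_sink(v_fb_target: float, r_top_ohm: float,
                        r_bottom_ohm: float, i_sink_ua: float) -> float:
    """Regulated output when current is sunk from the FB node.

    The current through r_top equals the current through r_bottom plus the
    sunk current, so Vout = Vfb * (1 + r_top / r_bottom) + I_sink * r_top.
    i_sink_ua is the sunk current as a positive number of microamps.
    """
    i_sink_a = i_sink_ua * 1e-6
    return v_fb_target * (1.0 + r_top_ohm / r_bottom_ohm) + i_sink_a * r_top_ohm


# Hypothetical divider: 2 kOhm from Vout to FB, 3 kOhm from FB to GND.
print(f"{output_with_fb_sink(0.8, 2000, 3000, 0):.3f} V")   # 1.333 V
print(f"{output_with_fb_sink(0.8, 2000, 3000, 30):.3f} V")  # 1.393 V
```

Note that the function takes the sunk current as a positive number, whereas the EVC2 software expresses sinking as a negative value.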

ElmorLabs EVC2SX

The ElmorLabs EVC2SX is the latest addition to the EVC2 product line.

The ElmorLabs EVC2SX enables digital or analog voltage control using I2C/SMBus/PMBus. The device also has UART and SPI functionality. It can be considered the foundation for the ElmorLabs ecosystem as you can expand the functionality of some ElmorLabs products by connecting it to an EVC2.

In this case, we’re interested in the 3 VMOD headers that provide digital voltage sense and feedback adjustment capabilities for analog VRM controllers. It works using an onboard current-DAC that sources or sinks current into the feedback pin. For a detailed overview, you can refer to the tutorial on ElmorLabs’ forum. Please note that different versions of the EVC2 are using slightly different configurations for the onboard current-DAC.

I’ll try to keep the step-by-step explanation as practical as possible in this video.

Step 1: identify the voltage controllers you want to control with the EVC2SX.

Step 2: determine how the hardware modification will work

Step 3: find the headers near the VMOD1 marking on the EVC2SX PCB

On the EVC2SX, there are seven pins: one for ground (GND) and three sets of two pins, each set serving one voltage controller. Within each set, one pin is used for voltage measurement and the other for voltage adjustments. Starting from the ground pin on the left, every other pin is for voltage measurement, and the pins in between are for voltage adjustments.

Step 4: connect the various pins to the relevant points on your graphics card

In my case, I connect the pins from left to right as follows:

- Pin 1: any Ground (GND) of the graphics card

- Pin 2: the current source for the GPU voltage circuit

- Pin 3: Voltage measurement point for GPU voltage

- Pin 4: the current sink for the memory voltage circuit

- Pin 5: Voltage measurement for the memory voltage
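The pin layout described above follows a simple pattern, which this small helper captures. It is only a memory aid based on the description in this guide; the channel numbering is an assumption, so check the silkscreen on your own board before soldering.

```python
def vmod_pin_role(pin: int) -> str:
    """Role of a VMOD header pin, counting from the ground pin on the left.

    Pin 1 is ground; after that, even pins adjust and odd pins measure.
    """
    if not 1 <= pin <= 7:
        raise ValueError("the VMOD header has pins 1 through 7")
    if pin == 1:
        return "GND"
    channel = pin // 2  # pins 2-3 -> 1, pins 4-5 -> 2, pins 6-7 -> 3
    kind = "adjust" if pin % 2 == 0 else "measure"
    return f"channel {channel} {kind}"


for pin in range(1, 8):
    print(pin, vmod_pin_role(pin))
```

In the wiring used here, channel 1 is the GPU voltage and channel 2 is the memory voltage, leaving channel 3 unused.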

Step 5: open the ElmorLabs EVC2 software for voltage monitoring and control

You can find the relevant controls under the DAC1 submenu. Here you will find VIN1, VIN2, and VIN3. If your voltage measurement point is correctly connected, you should immediately see the voltage monitoring.

By using the dropdown menu options, you can adjust the number of microamps sunk or sourced from the connected voltage controller.

As I explained in the previous segments, we are sourcing current on the REFIN pin for the GPU voltage hardware modification. That means we can increase the voltage by using positive values. Conversely, we are sinking current on the FB pin for the memory voltage hardware modification. That means we can increase the voltage by using negative values.

Please be aware that any changes in these dropdowns can cause permanent damage to your hardware, so be very careful when adjusting the voltages. I suggest taking the following precautions:

- Every time you want to adjust the voltage, always start with the smallest step up or down. That allows you to double-check if the voltage monitoring works correctly and confirm the step size of the voltage adjustment.

- Increase the voltage step by step to ensure the voltage increases as you expect – while the EVC2SX provides a great function, it does not offer fine-grain voltage control. So the step size might not always be ideal. In my case, I saw a 100mV step increase in GPU voltage.
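These precautions amount to a simple procedure: apply one step, read the voltage back, and stop as soon as the reading is not what you expect. The sketch below expresses that logic against a simulated device. The `FakeVrm` class and both callables are hypothetical stand-ins; in this guide the EVC2 software is operated through its interface by hand, and no scripting API is implied.

```python
def ramp_voltage(set_current_ua, read_voltage, steps_ua, v_limit):
    """Apply current settings one at a time and verify each by reading back.

    Stops and returns to the last good setting if the voltage exceeds
    v_limit or fails to rise. Returns the list of settings that were kept.
    """
    applied = []
    previous = read_voltage()
    for ua in steps_ua:
        set_current_ua(ua)
        now = read_voltage()
        if now > v_limit or now <= previous:
            set_current_ua(applied[-1] if applied else 0)  # back off
            break
        applied.append(ua)
        previous = now
    return applied


class FakeVrm:
    """Simulated hardware: 0.8 V base, 10 mV per microamp (hypothetical)."""

    def __init__(self):
        self.current_ua = 0

    def set_current_ua(self, ua):
        self.current_ua = ua

    def read_voltage(self):
        return 0.8 + 0.01 * self.current_ua


vrm = FakeVrm()
kept = ramp_voltage(vrm.set_current_ua, vrm.read_voltage,
                    steps_ua=[10, 20, 30, 40, 50, 60], v_limit=1.25)
print(kept)            # [10, 20, 30, 40]
print(vrm.current_ua)  # 40
```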

Upon opening the ElmorLabs EVC2 software,

- Access the DAC1 section

- Set VIN1 to 40uA

- Set VIN2 to 0uA

- Click Apply

Then open the ASUS GPU Tweak III tool

- Open the settings menu

- Select Enhance overclocking range

- Click Save

- Leave the settings menu

- Set Power Target to 100%

- Set GPU Voltage to 100%

- Enter the VF Tuner feature

- Select all V/F Points (CTRL+A)

- Click Align Points Up (A)

- Click Move Points Up until the highest V/F Point reaches 2034 MHz

- Click Apply

- Click Close to leave the VF Tuner feature

- Set Memory Clock to 8600 (+2592)

- Set GPU Temp Target to 97C

Then click Apply to confirm the overclocked settings.

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- Geekbench 5 CUDA: +28.25%

- Geekbench 5 OpenCL: +27.28%

- Geekbench 5 Vulkan: +27.77%

- Furmark 1080P: +38.14%

- FluidMark 1080P: +20.32%

- 3DMark Night Raid: +25.45%

- Unigine Superposition: +30.09%

- Spaceship: +26.89%

- CS:GO FPS Bench: +32.23%

- Final Fantasy XV: +32.70%

- GPUPI 1B CUDA: +18.79%

Working around the main limitations of the GeForce GT 1030 by employing hardware modifications certainly helps further boost the performance gains from overclocking. We see the most significant performance gain in Furmark 1080P with +38.14%.

When running Furmark GPU Stress Test, the average GPU clock is 1778 MHz with 1.2 volts, and the GPU Memory clock is 2157 MHz with 1.2 volts. The average GPU temperature is 78.2 degrees Celsius, and the average GPU Hot Spot Temperature is 86.2 degrees Celsius. The average GPU power is an estimated 47.13 watts.

When running the GPU-Z Render Test, the maximum GPU Clock is 2037.5 MHz with 1.113V.

OC Strategy #5: EK-Thermosphere Water Cooling

In our fifth and final overclocking strategy, we use the EK-Thermosphere and custom loop water cooling to improve our overclock further.

EK-Thermosphere

Launched back in May 2014, the EK-Thermosphere is a high-performance universal GPU water block built on the EK-Supremacy cooling engine. A crucial difference between this universal GPU water block and the modern full-cover water blocks is that the universal water block only cools the GPU. In contrast, the full-cover water blocks often also cool the memory chips and VRM components.

The EK-Thermosphere comes with a pre-installed mounting plate for the G80 GPU, but for the GT 1030, I needed the G92 mounting plate. Unfortunately, the mounting plates are out of stock on the EK web shop, and it’s also nearly impossible to find any mounting plates in retail stores.

Fortunately, the EK team helped provide the 3D models of the mounting plates, and thanks to Frank from G.SKILL, I could have a couple of mounting plates locally produced in Taipei by a company called HonTen Electronic.

Even with the correct mounting plate, installing the Thermosphere was not very straightforward as the GP108 die is not as thick as the G92 die. So I had to fiddle with the installation method to get everything to work correctly.

Cool GPU, Hot VRM

Using custom loop water cooling offers two main advantages:

- It has a substantially higher total cooling capacity than the standard air cooler. In other words, it can handle a higher total wattage.

- It also has substantially better thermal transfer capabilities, meaning it can dissipate more watts per square millimeter (W/mm2)

The first point isn’t that important as the GT 1030 consumes barely any power even when heavily overclocked.

The second point is more relevant as, clearly, our air cooler cannot transfer the heat fast enough from the die to the heatsink fins. Generally, you’d describe this aspect of the thermal solution in terms of watts per square millimeter (W/mm2). A liquid cooling solution with highly optimized thermal transfer can achieve up to around 2W/mm2, though most of the time, a typical liquid cooling system will hover at about 1.5W/mm2. With the GT 1030 water-cooled, we will likely not even come close to 1W/mm2.
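A quick estimate supports that expectation. The sketch assumes a GP108 die area of 74 mm2, a commonly cited figure that does not come from this guide, and uses the roughly 50W estimated GPU power seen with water cooling. Since part of that power is dissipated outside the die, the result is an upper bound.

```python
# Assumption: commonly cited GP108 die size, not a figure from this guide.
GP108_DIE_AREA_MM2 = 74.0


def heat_flux_w_per_mm2(power_w: float, die_area_mm2: float) -> float:
    """Average heat flux through the die surface."""
    return power_w / die_area_mm2


flux = heat_flux_w_per_mm2(50.55, GP108_DIE_AREA_MM2)
print(f"{flux:.2f} W/mm2")  # 0.68 W/mm2
```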

With standard air cooling, we can increase the GPU voltage to 1.2V. That results in a FurMark maximum GPU temperature of almost 90 degrees Celsius. We hope that the improved cooling creates so much additional thermal headroom that we can substantially increase the voltage, creating extra frequency headroom. However, we run into two major issues.

- First, the GP108 seems to scale very poorly with voltage and temperature. Despite increasing the voltage from 1.2V to 1.4V and reducing the peak temperature from 87 degrees Celsius to 62 degrees Celsius, we barely gain any additional frequency

- Second, the GPU VRM struggles to operate sufficiently cool with the increased voltage. It’s so bad that at 1.4V, we measure temperatures of 150C and can smell a slight burning going on.

So, the final result of our water-cooling test is that while our temperatures drop by about 25 degrees Celsius under load, an overheating VRM is critically limiting our voltage headroom. We can increase the voltage to 1.3V, but the GP108 die scales little to nothing with the additional voltage, so we barely achieve an extra 20 MHz. The colder temperatures are helping the memory controller run a little more stable. As a result, we can increase the memory frequency to 2200 MHz.

Upon opening the ElmorLabs EVC2 software,

- Access the DAC1 section

- Set VIN1 to 50uA

- Set VIN2 to -30uA

- Click Apply

Upon opening the ASUS GPU Tweak III tool

- Open the settings menu

- Select Enhance overclocking range

- Click Save

- Leave the settings menu

- Set Power Target to 100%

- Set GPU Voltage to 100%

- Enter the VF Tuner feature

- Select all V/F Points (CTRL+A)

- Click Align Points Up (A)

- Click Move Points Up until the highest V/F Point reaches 2069 MHz

- Click Apply

- Click Close to leave the VF Tuner feature

- Set Memory Clock to 8800 (+2792)

- Set GPU Temp Target to 97C

Then click Apply to confirm the overclocked settings.

We re-ran the benchmarks and checked the performance increase compared to the default operation.

- Geekbench 5 CUDA: +28.76%

- Geekbench 5 OpenCL: +27.47%

- Geekbench 5 Vulkan: +28.81%

- Furmark 1080P: +38.22%

- FluidMark 1080P: +20.91%

- 3DMark Night Raid: +28.94%

- Unigine Superposition: +33.28%

- Spaceship: +28.77%

- CS:GO FPS Bench: +33.97%

- Final Fantasy XV: +33.39%

- GPUPI 1B CUDA: +18.98%

Adding additional cooling to the GT 1030 positively impacts the temperatures under load. Unfortunately, there’s little improvement in the overclocking capabilities for various reasons. We see the most significant performance gain in Furmark 1080P with +38.22%.

When running Furmark GPU Stress Test, the average GPU clock is 1706 MHz with 1.3 volts, and the GPU Memory clock is 2201 MHz with 1.4 volts. The average GPU temperature is 53.7 degrees Celsius, and the average GPU Hot Spot Temperature is 61.7 degrees Celsius. The average GPU power is an estimated 50.55 watts.

When running the GPU-Z Render Test, the maximum GPU Clock is 2050 MHz with 1.3V.

NVIDIA GeForce GT 1030: Conclusion

Alright, let us wrap this up.

As I stated at the beginning of the video, this project was more about getting back into discrete GPU overclocking than the GPU itself. The GeForce GT 1030 was simply the cheapest graphics card I could find at the time.

I genuinely learned a lot during this project. For example, I learned the NVIDIA GPU Boost technology is pretty good at maxing out your graphics card and providing solid out-of-the-box performance. I also learned more about hardware modifications and how voltage controllers work. Lastly, I realized that liquid cooling isn’t always the magic ingredient for achieving big overclocks. Overall, this was a valuable experience for me.

When it comes to the GeForce GT 1030, the main takeaways are that (1) memory frequency is crucial to improving the performance, (2) unless you’re willing to do hardware modifications, NVIDIA’s limitations will restrict your overclock, and (3) even with water cooling and high voltage, the GPU overclocking capabilities are pretty limited.

Anyway, that’s all for today!

I want to thank my Patreon supporters, Coffeepenbit and Andrew, for supporting my work.

As per usual, if you have any questions or comments, feel free to drop them in the comment section below.

Till the next time!
