I Broke the GPU Frequency Overclocking World Record

I broke the GPU Frequency Overclocking World Record with Intel Graphics at the Computex 2025 G.SKILL World Record OC Stage.

Introducing the GPU Frequency Overclocking World Record

The first thing we need to get out of the way is how to substantiate the claim of a GPU Frequency Overclocking World Record. Unfortunately, there is no single source of truth when it comes to GPU frequency records. However, I’ve been maintaining a database of CPU, GPU, and memory world records on my blog.

I’ve split the database into four categories: one overall ranking and one for each type of GPU (discrete, integrated into the CPU, and integrated into the chipset). The data goes back to the late 1990s, though there may be a few inaccuracies from the early days, since no record-keeping services existed back then. Feel free to reach out if you can contribute to the historical accuracy of these leaderboards.

The most important rule of my leaderboard is that the frequency must be measured while the GPU is active. However, just like with CPU frequency validations, the GPU doesn’t need to be active for very long or under a particularly heavy load.

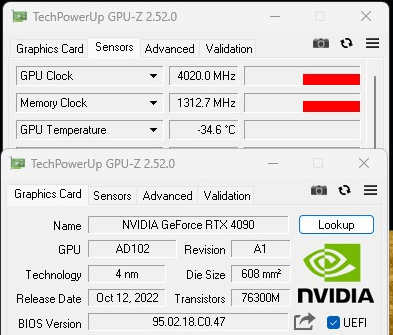

Prior to my record attempt at Computex 2025, the highest achieved GPU frequency was 4020 MHz by Splave with the NVIDIA GeForce RTX 4090. The GPU was stable for at least 825 milliseconds during the GPUPI 1B benchmark.

Overclocking Arrow Lake’s Intel Graphics

I used the Intel Graphics integrated in the Arrow Lake Core Ultra 9 285K for the record attempt. I overclocked the Intel Graphics on two previous occasions: once for my Arrow Lake launch content back in October last year and once more for SkatterBencher #86. If you want to know more about how to overclock the Intel Graphics, check out that last video.

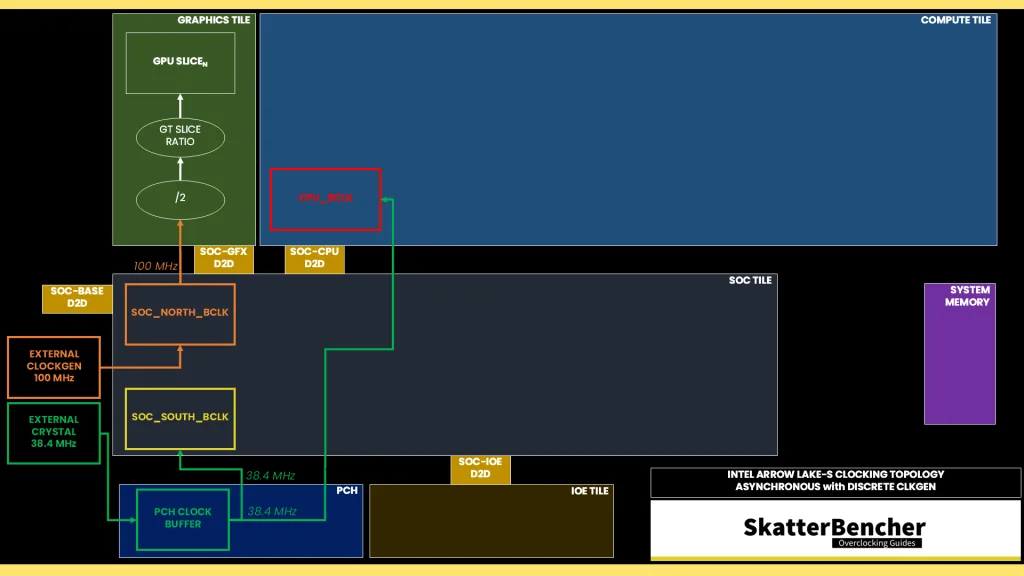

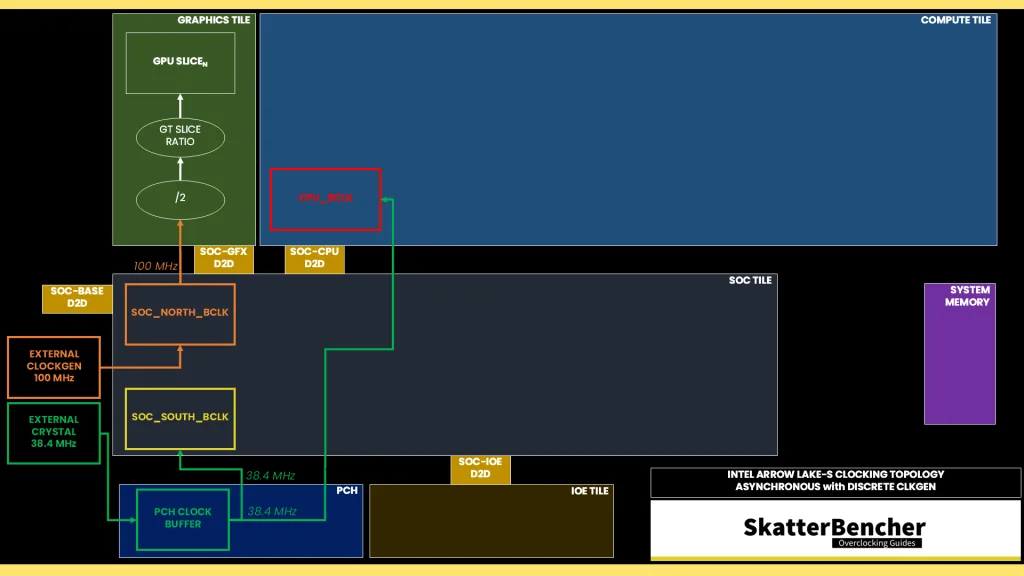

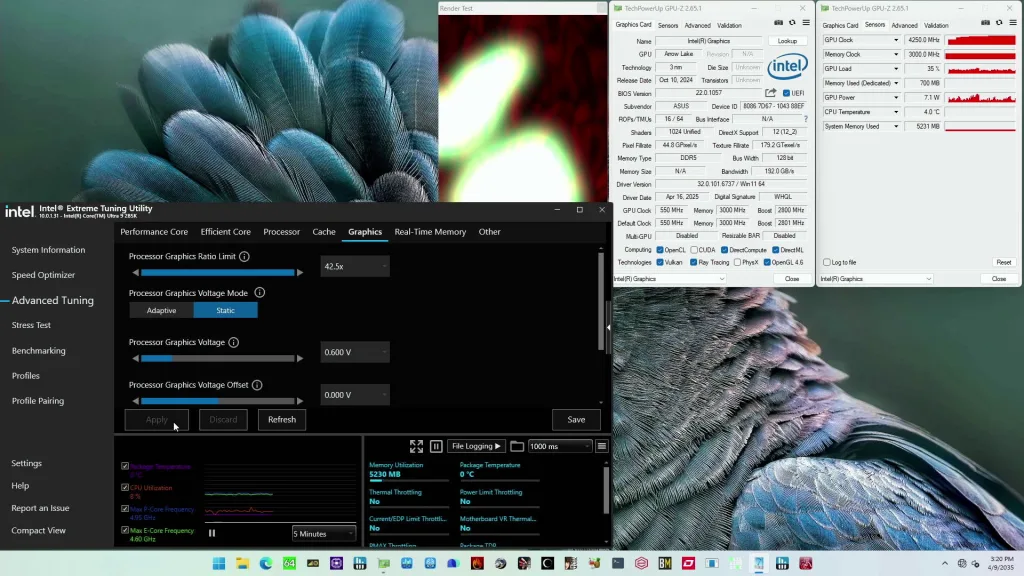

Long story short: the graphics frequency is derived from the 100 MHz SOC reference clock, which is first halved and then multiplied by the GT ratio. The GT ratio can be configured up to 85X, which means the graphics can run at up to 4.25 GHz (100 MHz ÷ 2 × 85) with the default reference clock.
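To make the arithmetic concrete, here is a minimal Python sketch of the frequency relationship described above. The function name `gt_frequency_mhz` is hypothetical, and it models only the stated formula (reference clock halved, then multiplied by the GT ratio), not any BIOS or Intel software interface. The 85X ceiling comes from the text; the lower bound is just a sanity check, not a documented minimum.

```python
# Hypothetical helper modelling the relationship described in the text:
#   GT frequency = (SOC reference clock / 2) * GT ratio
MAX_GT_RATIO = 85  # highest configurable GT ratio, per the text


def gt_frequency_mhz(refclk_mhz: float, gt_ratio: int) -> float:
    """Return the graphics frequency in MHz for a reference clock and GT ratio."""
    # Sanity check only: the ratio must be positive and not exceed the 85X cap
    if not 0 < gt_ratio <= MAX_GT_RATIO:
        raise ValueError(f"GT ratio must be between 1 and {MAX_GT_RATIO}")
    return refclk_mhz / 2 * gt_ratio


print(gt_frequency_mhz(100, 85))  # 4250.0 MHz with the default 100 MHz reference clock
print(gt_frequency_mhz(110, 76))  # 4180.0 MHz with a 110 MHz reference clock
```

The second call illustrates that a raised reference clock reaches a given frequency with a lower ratio. Note that 76X is my own back-calculation for 4180 MHz at 110 MHz; the exact ratio used isn't stated here.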

The voltage is provided by a dedicated VccGT voltage rail, which powers only the graphics cores in the Graphics tile. The voltage can be configured in either SVID or PMBus mode. SVID is the default, while PMBus is preferred for extreme overclocking because it gives us direct control over the output voltage.

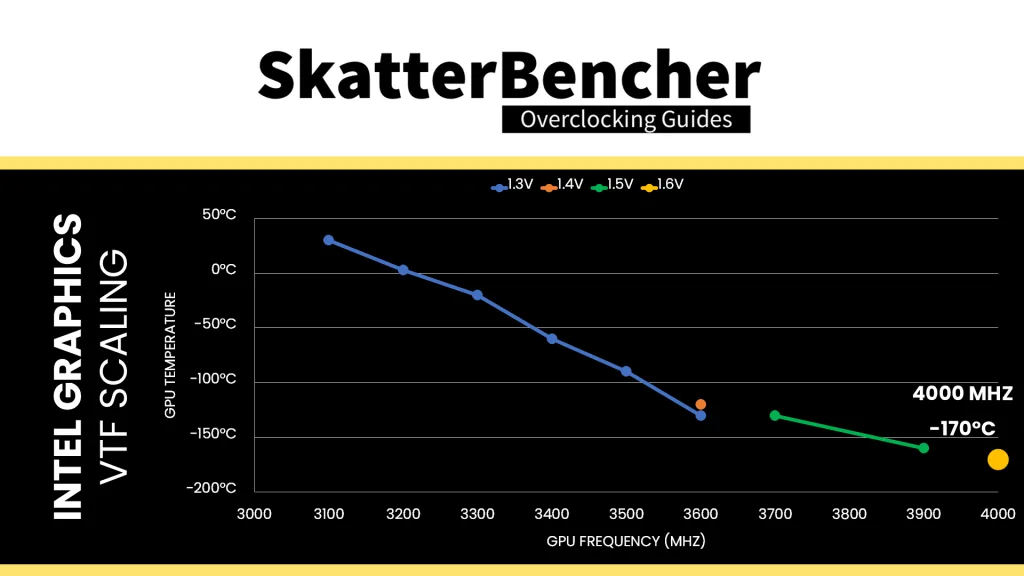

Voltage-Temperature-Frequency Scaling

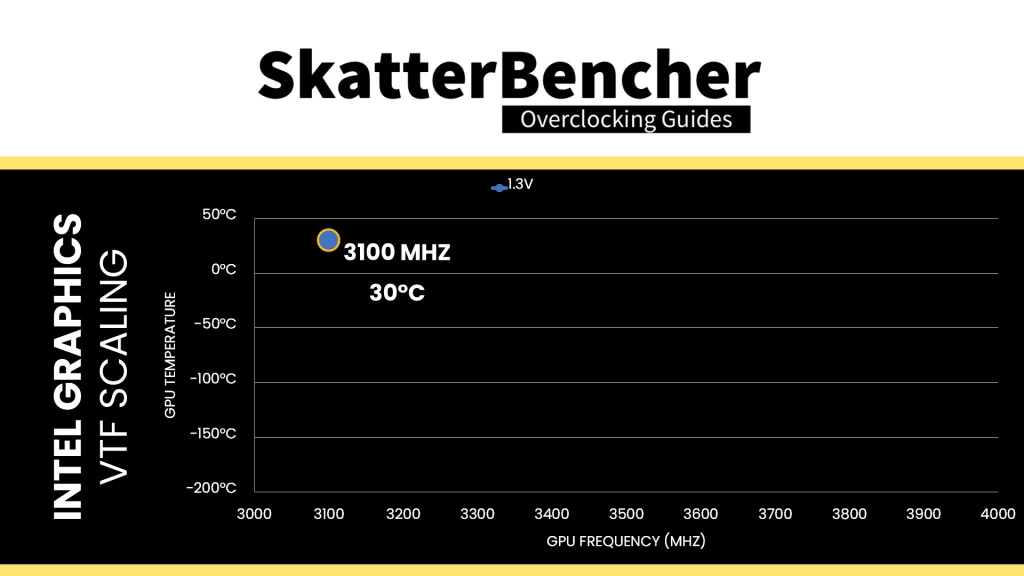

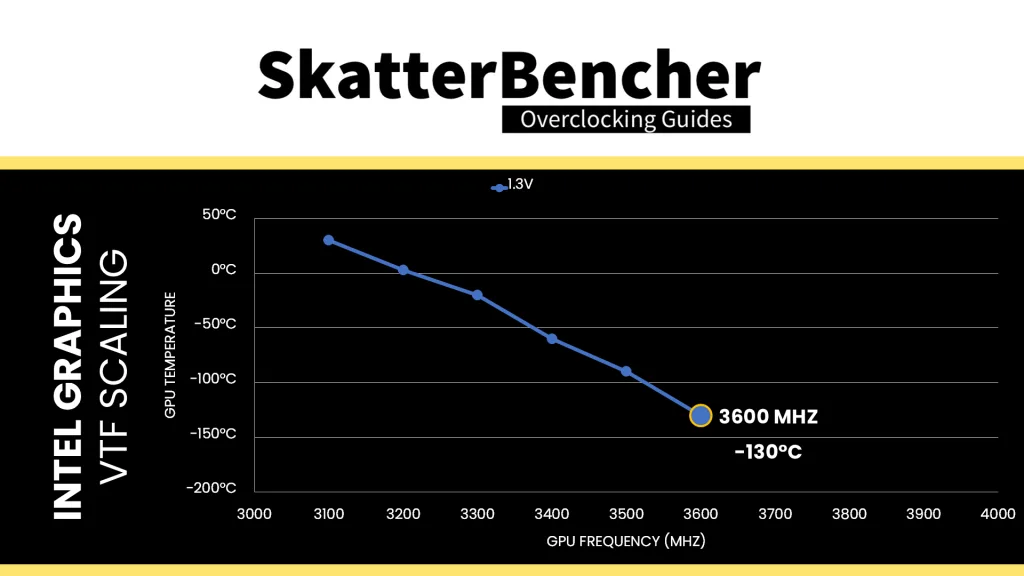

For my SkatterBencher guide I was able to clock up the Intel Graphics to 3100 MHz with 1.3V. Higher voltage didn’t seem to add a lot of overclocking headroom. In the weeks prior to Computex, we spent some time characterizing the behavior with liquid nitrogen.

The integrated graphics scales much better with temperature than with voltage. At 1.3V and -130°C we’re able to achieve 3.6 GHz. The road to 4 GHz is much more challenging, however, as we need 1.6V and -170°C.

Operating Arrow Lake at such low temperatures isn’t straightforward, but luckily we’re in good hands with Shamino and the ROG Z890 Apex motherboard.

Achieving the GPU Frequency Overclocking World Record

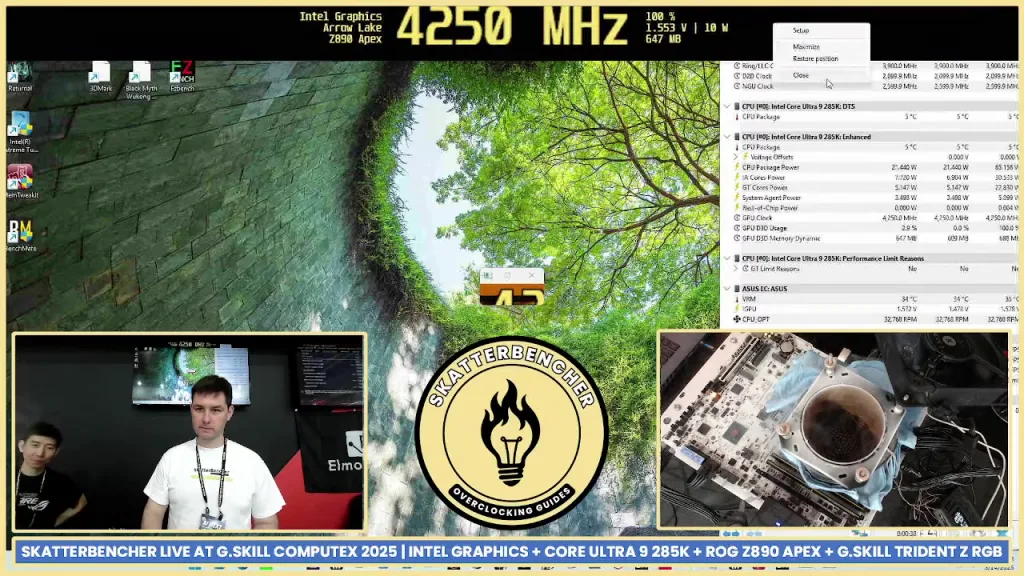

Getting the record was actually quite easy: we even achieved it on May 9th, during our pretesting session prior to Computex. We achieved 4250 MHz running GPU-Z using the highest available graphics ratio of 85X.

At the Computex 2025 G.SKILL OC World Record stage, we repeated this feat during the livestream.

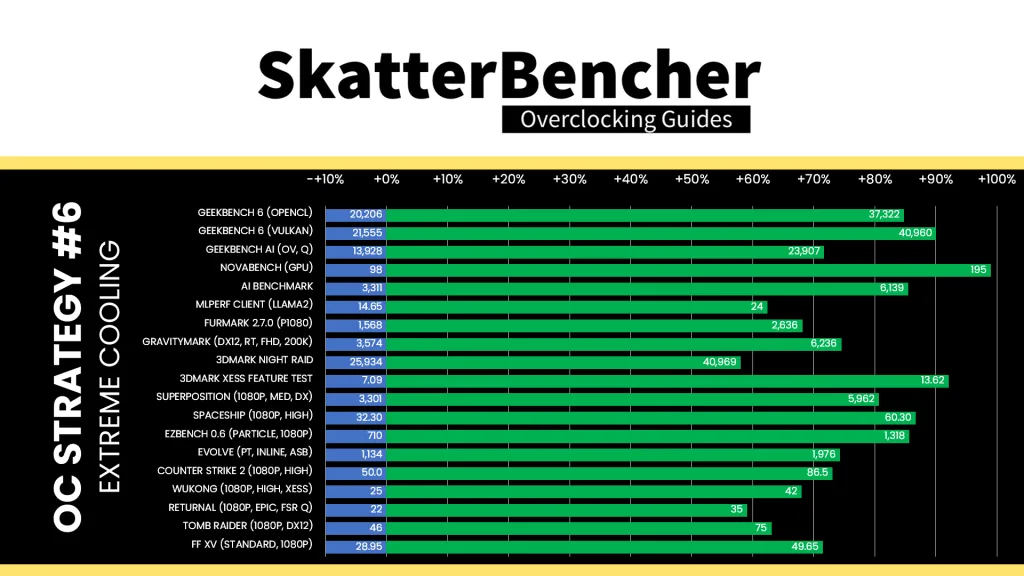

Performance at 3900 MHz

The next objective for the Computex 2025 demo was to run all the benchmarks used in SkatterBencher #86 at an extreme yet stable frequency and with a high-performance memory configuration. A GPU frequency of 3900 MHz, paired with DDR5-8600 and an optimized memory subsystem, was relatively easy to keep stable at 1.6V and -160°C. It passed all our benchmark tests.

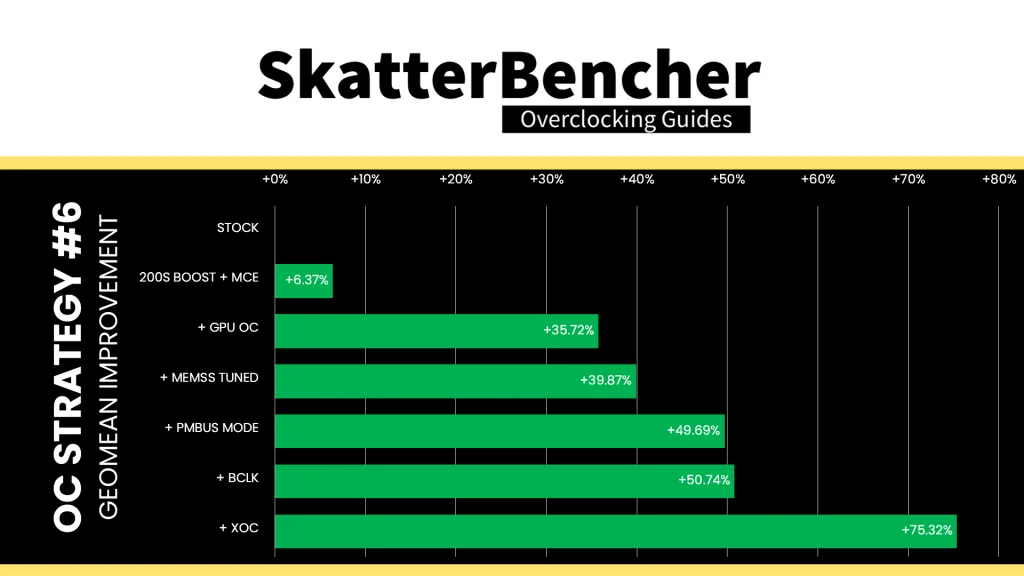

Of course, performance improves across the board, with Novabench showing a near doubling compared to stock. The geomean performance is twenty-five percentage points higher than with our maxed-out ambient-cooling configuration.
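For readers unfamiliar with geomean summaries, here is a minimal Python sketch of the usual approach: each benchmark result is expressed as a ratio against a baseline, and the ratios are combined with a geometric mean so that no single benchmark dominates. The function name and the sample ratios are hypothetical, and I’m assuming this standard ratio-based method rather than describing the exact spreadsheet behind the numbers above.

```python
import math


def geomean(ratios: list[float]) -> float:
    """Geometric mean of per-benchmark performance ratios (new / baseline).

    For time-based benchmarks such as GPUPI (lower is better), pass
    baseline_time / new_time so that a higher ratio always means faster.
    """
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("ratios must be a non-empty list of positive numbers")
    # Average in log space: equivalent to the n-th root of the product,
    # but numerically safer for long lists
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Placeholder ratios for illustration only, not measured results
print(round(geomean([2.0, 1.5, 1.2]), 3))
```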

Pushing Performance Limits

Next, I wanted to push the performance limits of the Intel Graphics by going up to 1.7V while staying at -170°C. With the elevated voltage, I was able to pass multiple 3DMark tests and Furmark at 4250 MHz during the livestream (with validated results at 4200 and 4100 MHz, respectively).

However, the performance seemed a bit off.

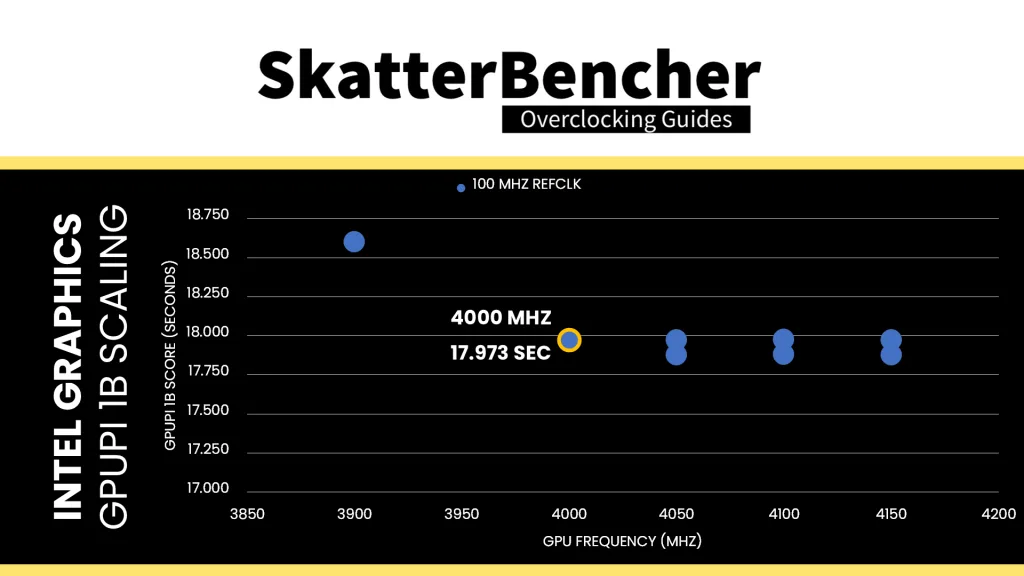

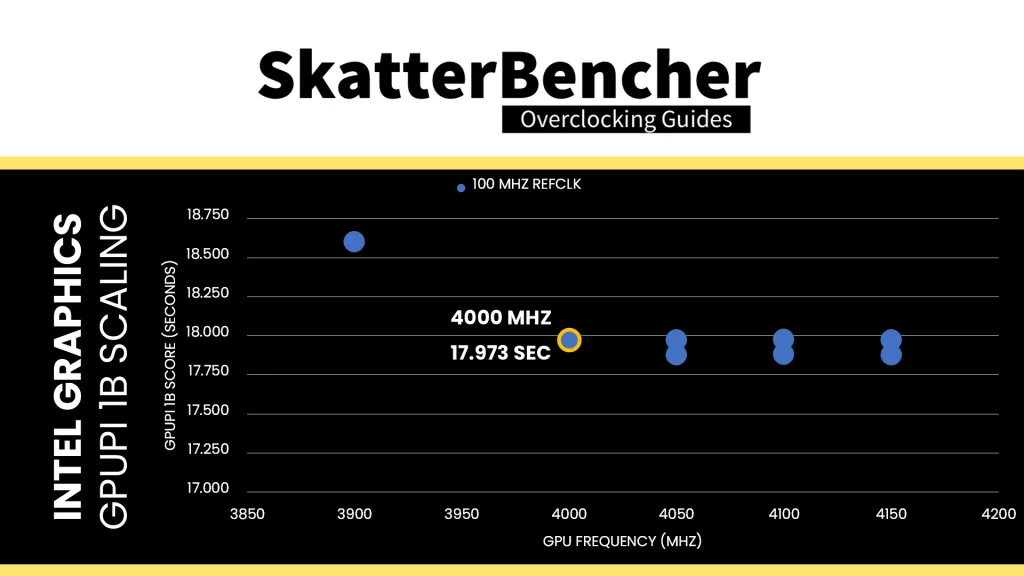

Here’s the problem: once we go above 4 GHz, performance seems to stop scaling. I observed this in multiple benchmarks:

- Furmark 1080P tops out at around 2800 points,

- 3DMark Speed Way tops out at around 650 marks, and

- GPUPI 1B gets stuck at around 17.9 seconds.

There are a variety of reasons why performance could stop scaling. For example, there could be FLL clocking issues like those we saw on Alder Lake, where the effective clock is lower than the set clock, or another part of the chip could be a severe bottleneck.

For the CPU cores, we can check the effective clock in HWiNFO to see if there’s a problem with the clock frequency. However, no such information is available for the graphics. So, unfortunately, benchmark performance is the only way to assess the GPU’s effective clock frequency.

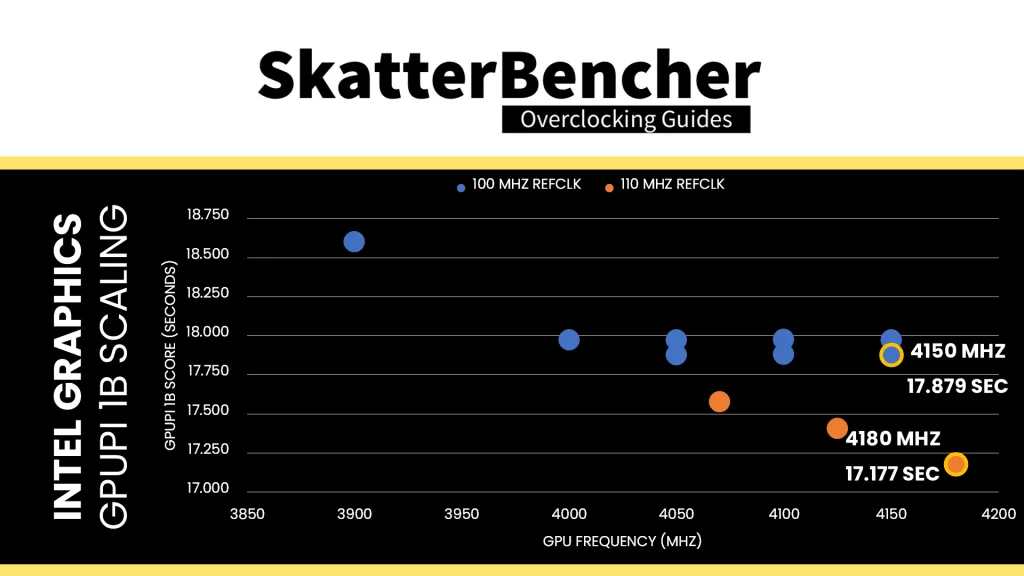

In the final strategy of my SkatterBencher guide, I noted that despite running slightly lower frequencies, performance seemed to improve when relying on an increased reference clock. That suggests that overclocking the graphics die-to-die (D2D) interface can impact performance.

For some reason, we couldn’t get reference clock overclocking to work during our test sessions leading up to Computex: it would simply crash at around 4 GHz. However, on stage I did get it to work, and the results were surprising!

With the standard reference clock of 100 MHz, GPUPI 1B performance plateaus at around 4 GHz with a score of about 17.9 seconds. However, with an increased reference clock of 110 MHz, performance scaling is back to normal, and we get 17.177 seconds at 4180 MHz. That’s an improvement of roughly 4% at the same GPU clock.
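Since benchmark scores are the only window into the GPU’s effective clock, one rough way to reason about the GPUPI numbers is to assume runtime scales inversely with frequency and calibrate against a known point. The Python sketch below does that. The helper names are hypothetical, and treating roughly 17.9 seconds at roughly 4000 MHz as a “normally scaling” calibration point is my assumption: it sits right at the start of the plateau, so the estimates are approximate at best.

```python
# Assumption: GPUPI 1B runtime is inversely proportional to the effective GPU
# clock (time * frequency = constant), calibrated at a point that still scales.
REF_MHZ, REF_S = 4000, 17.9  # approximate calibration point from the text


def expected_time_s(ref_mhz: float, ref_s: float, target_mhz: float) -> float:
    """Ideal runtime at target_mhz if performance scaled perfectly with clock."""
    return ref_s * ref_mhz / target_mhz


def implied_effective_clock_mhz(ref_mhz: float, ref_s: float, measured_s: float) -> float:
    """Effective clock implied by a measured runtime under the same assumption."""
    return ref_mhz * ref_s / measured_s


print(round(expected_time_s(REF_MHZ, REF_S, 4180), 2))  # 17.13 s ideal at 4180 MHz
print(round(implied_effective_clock_mhz(REF_MHZ, REF_S, 17.177)))  # 4168 MHz (110 MHz refclk run)
print(round(implied_effective_clock_mhz(REF_MHZ, REF_S, 17.9)))  # 4000 MHz (plateaued 100 MHz refclk run)
# Relative speedup at 4180 MHz: ~17.9 s (100 MHz refclk) vs 17.177 s (110 MHz refclk)
print(f"{(REF_S / 17.177 - 1) * 100:.1f}%")  # 4.2%
```

Under this simple model, the 110 MHz reference clock run behaves like a GPU actually running close to its set 4180 MHz, while the plateaued run behaves as if stuck near 4000 MHz regardless of the set clock.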

That’s not spectacular, of course, but it illustrates that performance scaling may be limited by the graphics D2D. By default, the GFX D2D runs at 2.1 GHz, and there’s no ratio control available; that’s reserved exclusively for the compute D2D on Arrow Lake. However, the D2D clock is linked to the SOC reference clock, so at 110 MHz the GFX D2D frequency is 2310 MHz.
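Because the GFX D2D has no ratio control, the reference clock is the only lever. Here is a minimal Python sketch of that relationship. The fixed 21X multiplier is my inference from the two data points above (2100 MHz at 100 MHz and 2310 MHz at 110 MHz), and the constant and function names are hypothetical. Whether the relationship stays perfectly linear across other reference clock values is an assumption.

```python
# Hypothetical fixed multiplier inferred from the text: 2100 MHz / 100 MHz = 21X.
# No GFX D2D ratio control is exposed, so only the reference clock moves it.
GFX_D2D_MULTIPLIER = 21


def gfx_d2d_mhz(refclk_mhz: float) -> float:
    """Estimated GFX D2D frequency in MHz for a given SOC reference clock."""
    return refclk_mhz * GFX_D2D_MULTIPLIER


for refclk in (100, 105, 110):
    print(f"{refclk} MHz refclk -> GFX D2D {gfx_d2d_mhz(refclk):.0f} MHz")
```

This also shows why the reference clock route is a trade-off: raising it lifts the D2D and the GT frequency together, so the GT ratio has to come down to keep the graphics clock in a stable range.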

Outro

Anyway, that’s it for our Computex 2025 adventures.

I want to thank ASUS and G.SKILL for inviting me back to show off extreme overclocking on stage, and Shamino from ASUS for helping figure out how to push the integrated graphics. He says he doesn’t like IGP overclocking, but I think this picture proves otherwise.

I want to thank my Patreon supporters and YouTube members for supporting my work. If you have any questions or comments, please drop them in the comment section below. See you next time!

bert

RDNA4 needs you to break a new record:

https://www.overclock.net/threads/software-removing-rdna3-rdna4-desktop-class-power-limits-and-adding-vid-offsets.1816083/page-2?post_id=29481571&nested_view=1&sortby=oldest#post-29481571

Pieter

Very interesting, thanks for sharing!